Difference between revisions of "Example Stereo Disparity"

m |

m |

||

| (6 intermediate revisions by the same user not shown) | |||

| Line 9: | Line 9: | ||

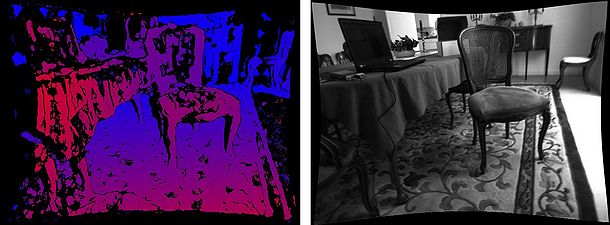

For visualization purposes the disparity is encoded using a color histogram. Hotter colors indicate closer objects while cooler objects indicate objects that are farther away. Cameras must be accurate calibrated or else an error of a few pixels will drastically degrade performance. A common preprocessing step is to run a Laplacian of Gaussian edge detector across the image to provide invariance to lighting conditions. This was not done below because the cameras have their gain synchronized. | For visualization purposes the disparity is encoded using a color histogram. Hotter colors indicate closer objects while cooler objects indicate objects that are farther away. Cameras must be accurate calibrated or else an error of a few pixels will drastically degrade performance. A common preprocessing step is to run a Laplacian of Gaussian edge detector across the image to provide invariance to lighting conditions. This was not done below because the cameras have their gain synchronized. | ||

Example File: [https://github.com/lessthanoptimal/BoofCV/blob/v0. | Example File: [https://github.com/lessthanoptimal/BoofCV/blob/v0.41/examples/src/main/java/boofcv/examples/stereo/ExampleStereoDisparity.java ExampleStereoDisparity.java] | ||

Concepts: | Concepts: | ||

| Line 18: | Line 18: | ||

Related Examples: | Related Examples: | ||

* [[Example_Rectification_Calibrated| Rectifying Calibrated Cameras]] | * [[Example_Rectification_Calibrated| Rectifying Calibrated Cameras]] | ||

* [[Example_Stereo_Single_Camera| Stereo from Single Camera Example]] | * [[Example_Stereo_Single_Camera| Stereo from Single Camera Example]] | ||

* [[Example_Stereo_Mesh| Disparity to Mesh]] | |||

* [[Example_Stereo_Disparity_3D| Disparity to Point Cloud]] | |||

Related Videos | |||

* [https://www.youtube.com/watch?v=8pn9Ebw90uk&t=672s Stereo Update 2020] | |||

= Example Code = | = Example Code = | ||

| Line 25: | Line 29: | ||

/** | /** | ||

* The disparity between two stereo images is used to estimate the range of objects inside | * The disparity between two stereo images is used to estimate the range of objects inside | ||

* the camera's view. | * the camera's view. Disparity is the difference in position between the viewed location | ||

* of a point in the left and right stereo images. | * of a point in the left and right stereo images. Because input images are rectified, | ||

* corresponding points can be found by only searching along image rows. | * corresponding points can be found by only searching along image rows. | ||

* | * | ||

* Values in the disparity image specify how different the two images are. | * Values in the disparity image specify how different the two images are. A value of X indicates | ||

* that the corresponding point in the right image from the left is at "x' = x - X - minDisparity", | * that the corresponding point in the right image from the left is at "x' = x - X - minDisparity", | ||

* where x' and x are the locations in the right and left images respectively. | * where x' and x are the locations in the right and left images respectively. An invalid value | ||

* with no correspondence is set to a value more than (max - min) disparity. | * with no correspondence is set to a value more than (max - min) disparity. | ||

* | * | ||

| Line 39: | Line 43: | ||

/** | /** | ||

* Computes the dense disparity between between two stereo images. | * Computes the dense disparity between between two stereo images. The input images | ||

* must be rectified with lens distortion removed to work! Floating point images | * must be rectified with lens distortion removed to work! Floating point images | ||

* are also supported. | * are also supported. | ||

| Line 46: | Line 50: | ||

* @param rectRight Rectified right camera image | * @param rectRight Rectified right camera image | ||

* @param regionSize Radius of region being matched | * @param regionSize Radius of region being matched | ||

* @param | * @param disparityMin Minimum disparity that is considered | ||

* @param | * @param disparityRange Number of disparity values considered. | ||

* @return Disparity image | * @return Disparity image | ||

*/ | */ | ||

public static GrayU8 denseDisparity(GrayU8 rectLeft , GrayU8 rectRight , | public static GrayU8 denseDisparity( GrayU8 rectLeft, GrayU8 rectRight, | ||

int regionSize, | |||

int disparityMin, int disparityRange ) { | |||

// A slower but more accuracy algorithm is selected | // A slower but more accuracy algorithm is selected | ||

// All of these parameters should be turned | // All of these parameters should be turned | ||

var config = new ConfigDisparityBMBest5(); | |||

config.errorType = DisparityError.CENSUS; | config.errorType = DisparityError.CENSUS; | ||

config.disparityMin = | config.disparityMin = disparityMin; | ||

config.disparityRange = | config.disparityRange = disparityRange; | ||

config.subpixel = false; | config.subpixel = false; | ||

config.regionRadiusX = config.regionRadiusY = regionSize; | config.regionRadiusX = config.regionRadiusY = regionSize; | ||

| Line 69: | Line 72: | ||

// process and return the results | // process and return the results | ||

disparityAlg.process(rectLeft,rectRight); | disparityAlg.process(rectLeft, rectRight); | ||

return disparityAlg.getDisparity(); | return disparityAlg.getDisparity(); | ||

| Line 78: | Line 81: | ||

* two is more apparent when a 3D point cloud is computed. | * two is more apparent when a 3D point cloud is computed. | ||

*/ | */ | ||

public static GrayF32 denseDisparitySubpixel(GrayU8 rectLeft , GrayU8 rectRight , | public static GrayF32 denseDisparitySubpixel( GrayU8 rectLeft, GrayU8 rectRight, | ||

int regionSize, | |||

int disparityMin, int disparityRange ) { | |||

// A slower but more accuracy algorithm is selected | // A slower but more accuracy algorithm is selected | ||

// All of these parameters should be turned | // All of these parameters should be turned | ||

var config = new ConfigDisparityBMBest5(); | |||

config.errorType = DisparityError.CENSUS; | config.errorType = DisparityError.CENSUS; | ||

config.disparityMin = | config.disparityMin = disparityMin; | ||

config.disparityRange = | config.disparityRange = disparityRange; | ||

config.subpixel = true; | config.subpixel = true; | ||

config.regionRadiusX = config.regionRadiusY = regionSize; | config.regionRadiusX = config.regionRadiusY = regionSize; | ||

config.maxPerPixelError = 35; | config.maxPerPixelError = 35; | ||

config.validateRtoL = 1; | config.validateRtoL = 1; | ||

config.texture = 0. | config.texture = 0.05; | ||

StereoDisparity<GrayU8, GrayF32> disparityAlg = | StereoDisparity<GrayU8, GrayF32> disparityAlg = | ||

FactoryStereoDisparity.blockMatchBest5(config, GrayU8.class, GrayF32.class); | FactoryStereoDisparity.blockMatchBest5(config, GrayU8.class, GrayF32.class); | ||

// process and return the results | // process and return the results | ||

disparityAlg.process(rectLeft,rectRight); | disparityAlg.process(rectLeft, rectRight); | ||

return disparityAlg.getDisparity(); | return disparityAlg.getDisparity(); | ||

| Line 105: | Line 107: | ||

* Rectified the input images using known calibration. | * Rectified the input images using known calibration. | ||

*/ | */ | ||

public static RectifyCalibrated rectify(GrayU8 origLeft , GrayU8 origRight , | public static RectifyCalibrated rectify( GrayU8 origLeft, GrayU8 origRight, | ||

StereoParameters param, | |||

GrayU8 rectLeft, GrayU8 rectRight ) { | |||

// Compute rectification | // Compute rectification | ||

RectifyCalibrated rectifyAlg = RectifyImageOps.createCalibrated(); | RectifyCalibrated rectifyAlg = RectifyImageOps.createCalibrated(); | ||

| Line 117: | Line 118: | ||

DMatrixRMaj K2 = PerspectiveOps.pinholeToMatrix(param.getRight(), (DMatrixRMaj)null); | DMatrixRMaj K2 = PerspectiveOps.pinholeToMatrix(param.getRight(), (DMatrixRMaj)null); | ||

rectifyAlg.process(K1,new Se3_F64(),K2,leftToRight); | rectifyAlg.process(K1, new Se3_F64(), K2, leftToRight); | ||

// rectification matrix for each image | // rectification matrix for each image | ||

DMatrixRMaj rect1 = rectifyAlg. | DMatrixRMaj rect1 = rectifyAlg.getUndistToRectPixels1(); | ||

DMatrixRMaj rect2 = rectifyAlg. | DMatrixRMaj rect2 = rectifyAlg.getUndistToRectPixels2(); | ||

// New calibration matrix, | // New calibration matrix, | ||

DMatrixRMaj rectK = rectifyAlg.getCalibrationMatrix(); | DMatrixRMaj rectK = rectifyAlg.getCalibrationMatrix(); | ||

// Adjust the rectification to make the view area more useful | // Adjust the rectification to make the view area more useful | ||

RectifyImageOps.allInsideLeft(param.left | RectifyImageOps.allInsideLeft(param.left, rect1, rect2, rectK, null); | ||

// undistorted and rectify images | // undistorted and rectify images | ||

var rect1_F32 = new FMatrixRMaj(3, 3); | |||

var rect2_F32 = new FMatrixRMaj(3, 3); | |||

ConvertMatrixData.convert(rect1, rect1_F32); | ConvertMatrixData.convert(rect1, rect1_F32); | ||

ConvertMatrixData.convert(rect2, rect2_F32); | ConvertMatrixData.convert(rect2, rect2_F32); | ||

ImageDistort<GrayU8,GrayU8> imageDistortLeft = | ImageDistort<GrayU8, GrayU8> imageDistortLeft = | ||

RectifyDistortImageOps.rectifyImage(param.getLeft(), rect1_F32, BorderType.SKIP, origLeft.getImageType()); | |||

ImageDistort<GrayU8,GrayU8> imageDistortRight = | ImageDistort<GrayU8, GrayU8> imageDistortRight = | ||

RectifyDistortImageOps.rectifyImage(param.getRight(), rect2_F32, BorderType.SKIP, origRight.getImageType()); | |||

imageDistortLeft.apply(origLeft, rectLeft); | imageDistortLeft.apply(origLeft, rectLeft); | ||

| Line 145: | Line 146: | ||

} | } | ||

public static void main( String | public static void main( String[] args ) { | ||

String calibDir = UtilIO.pathExample("calibration/stereo/Bumblebee2_Chess/"); | String calibDir = UtilIO.pathExample("calibration/stereo/Bumblebee2_Chess/"); | ||

String imageDir = UtilIO.pathExample("stereo/"); | String imageDir = UtilIO.pathExample("stereo/"); | ||

StereoParameters param = CalibrationIO.load(new File(calibDir , "stereo.yaml")); | StereoParameters param = CalibrationIO.load(new File(calibDir, "stereo.yaml")); | ||

// load and convert images into a BoofCV format | // load and convert images into a BoofCV format | ||

BufferedImage origLeft = UtilImageIO.loadImage(imageDir , "chair01_left.jpg"); | BufferedImage origLeft = UtilImageIO.loadImage(imageDir, "chair01_left.jpg"); | ||

BufferedImage origRight = UtilImageIO.loadImage(imageDir , "chair01_right.jpg"); | BufferedImage origRight = UtilImageIO.loadImage(imageDir, "chair01_right.jpg"); | ||

GrayU8 distLeft = ConvertBufferedImage.convertFrom(origLeft,(GrayU8)null); | GrayU8 distLeft = ConvertBufferedImage.convertFrom(origLeft, (GrayU8)null); | ||

GrayU8 distRight = ConvertBufferedImage.convertFrom(origRight,(GrayU8)null); | GrayU8 distRight = ConvertBufferedImage.convertFrom(origRight, (GrayU8)null); | ||

// rectify images | // rectify images | ||

| Line 162: | Line 163: | ||

GrayU8 rectRight = distRight.createSameShape(); | GrayU8 rectRight = distRight.createSameShape(); | ||

rectify(distLeft,distRight,param,rectLeft,rectRight); | rectify(distLeft, distRight, param, rectLeft, rectRight); | ||

// compute disparity | // compute disparity | ||

GrayU8 disparity = denseDisparity(rectLeft,rectRight,5,10, | int disparityRange = 60; | ||

// GrayF32 disparity = denseDisparitySubpixel(rectLeft,rectRight,5,10, | GrayU8 disparity = denseDisparity(rectLeft, rectRight, 5, 10, disparityRange); | ||

// GrayF32 disparity = denseDisparitySubpixel(rectLeft, rectRight, 5, 10, disparityRange); | |||

// show results | // show results | ||

BufferedImage visualized = VisualizeImageData.disparity(disparity, null, | BufferedImage visualized = VisualizeImageData.disparity(disparity, null, disparityRange, 0); | ||

var gui = new ListDisplayPanel(); | |||

gui.addImage(rectLeft, "Rectified"); | gui.addImage(rectLeft, "Rectified"); | ||

gui.addImage(visualized, "Disparity"); | gui.addImage(visualized, "Disparity"); | ||

ShowImages.showWindow(gui,"Stereo Disparity", true); | ShowImages.showWindow(gui, "Stereo Disparity", true); | ||

} | } | ||

} | } | ||

</syntaxhighlight> | </syntaxhighlight> | ||

Latest revision as of 18:03, 2 September 2022

Shows how to compute dense disparity between two rectified stereo images. BoofCV provides two different rectangular region based algorithms and noise reduction techniques targeted at real-time processing. Stereo vision can be difficult to get right, so please read all JavaDoc and cited papers. Dense stereo disparity is a computationally expensive and is likely to require a reduction in image size to achieve truly real-time performance.

For visualization purposes the disparity is encoded using a color histogram. Hotter colors indicate closer objects while cooler objects indicate objects that are farther away. Cameras must be accurate calibrated or else an error of a few pixels will drastically degrade performance. A common preprocessing step is to run a Laplacian of Gaussian edge detector across the image to provide invariance to lighting conditions. This was not done below because the cameras have their gain synchronized.

Example File: ExampleStereoDisparity.java

Concepts:

- Stereo Vision

- Disparity

- Rectification

Related Examples:

- Rectifying Calibrated Cameras

- Stereo from Single Camera Example

- Disparity to Mesh

- Disparity to Point Cloud

Related Videos

Example Code

/**

* The disparity between two stereo images is used to estimate the range of objects inside

* the camera's view. Disparity is the difference in position between the viewed location

* of a point in the left and right stereo images. Because input images are rectified,

* corresponding points can be found by only searching along image rows.

*

* Values in the disparity image specify how different the two images are. A value of X indicates

* that the corresponding point in the right image from the left is at "x' = x - X - minDisparity",

* where x' and x are the locations in the right and left images respectively. An invalid value

* with no correspondence is set to a value more than (max - min) disparity.

*

* @author Peter Abeles

*/

public class ExampleStereoDisparity {

/**

* Computes the dense disparity between between two stereo images. The input images

* must be rectified with lens distortion removed to work! Floating point images

* are also supported.

*

* @param rectLeft Rectified left camera image

* @param rectRight Rectified right camera image

* @param regionSize Radius of region being matched

* @param disparityMin Minimum disparity that is considered

* @param disparityRange Number of disparity values considered.

* @return Disparity image

*/

public static GrayU8 denseDisparity( GrayU8 rectLeft, GrayU8 rectRight,

int regionSize,

int disparityMin, int disparityRange ) {

// A slower but more accuracy algorithm is selected

// All of these parameters should be turned

var config = new ConfigDisparityBMBest5();

config.errorType = DisparityError.CENSUS;

config.disparityMin = disparityMin;

config.disparityRange = disparityRange;

config.subpixel = false;

config.regionRadiusX = config.regionRadiusY = regionSize;

config.maxPerPixelError = 35;

config.validateRtoL = 1;

config.texture = 0.2;

StereoDisparity<GrayU8, GrayU8> disparityAlg =

FactoryStereoDisparity.blockMatchBest5(config, GrayU8.class, GrayU8.class);

// process and return the results

disparityAlg.process(rectLeft, rectRight);

return disparityAlg.getDisparity();

}

/**

* Same as above, but compute disparity to within sub-pixel accuracy. The difference between the

* two is more apparent when a 3D point cloud is computed.

*/

public static GrayF32 denseDisparitySubpixel( GrayU8 rectLeft, GrayU8 rectRight,

int regionSize,

int disparityMin, int disparityRange ) {

// A slower but more accuracy algorithm is selected

// All of these parameters should be turned

var config = new ConfigDisparityBMBest5();

config.errorType = DisparityError.CENSUS;

config.disparityMin = disparityMin;

config.disparityRange = disparityRange;

config.subpixel = true;

config.regionRadiusX = config.regionRadiusY = regionSize;

config.maxPerPixelError = 35;

config.validateRtoL = 1;

config.texture = 0.05;

StereoDisparity<GrayU8, GrayF32> disparityAlg =

FactoryStereoDisparity.blockMatchBest5(config, GrayU8.class, GrayF32.class);

// process and return the results

disparityAlg.process(rectLeft, rectRight);

return disparityAlg.getDisparity();

}

/**

* Rectified the input images using known calibration.

*/

public static RectifyCalibrated rectify( GrayU8 origLeft, GrayU8 origRight,

StereoParameters param,

GrayU8 rectLeft, GrayU8 rectRight ) {

// Compute rectification

RectifyCalibrated rectifyAlg = RectifyImageOps.createCalibrated();

Se3_F64 leftToRight = param.getRightToLeft().invert(null);

// original camera calibration matrices

DMatrixRMaj K1 = PerspectiveOps.pinholeToMatrix(param.getLeft(), (DMatrixRMaj)null);

DMatrixRMaj K2 = PerspectiveOps.pinholeToMatrix(param.getRight(), (DMatrixRMaj)null);

rectifyAlg.process(K1, new Se3_F64(), K2, leftToRight);

// rectification matrix for each image

DMatrixRMaj rect1 = rectifyAlg.getUndistToRectPixels1();

DMatrixRMaj rect2 = rectifyAlg.getUndistToRectPixels2();

// New calibration matrix,

DMatrixRMaj rectK = rectifyAlg.getCalibrationMatrix();

// Adjust the rectification to make the view area more useful

RectifyImageOps.allInsideLeft(param.left, rect1, rect2, rectK, null);

// undistorted and rectify images

var rect1_F32 = new FMatrixRMaj(3, 3);

var rect2_F32 = new FMatrixRMaj(3, 3);

ConvertMatrixData.convert(rect1, rect1_F32);

ConvertMatrixData.convert(rect2, rect2_F32);

ImageDistort<GrayU8, GrayU8> imageDistortLeft =

RectifyDistortImageOps.rectifyImage(param.getLeft(), rect1_F32, BorderType.SKIP, origLeft.getImageType());

ImageDistort<GrayU8, GrayU8> imageDistortRight =

RectifyDistortImageOps.rectifyImage(param.getRight(), rect2_F32, BorderType.SKIP, origRight.getImageType());

imageDistortLeft.apply(origLeft, rectLeft);

imageDistortRight.apply(origRight, rectRight);

return rectifyAlg;

}

public static void main( String[] args ) {

String calibDir = UtilIO.pathExample("calibration/stereo/Bumblebee2_Chess/");

String imageDir = UtilIO.pathExample("stereo/");

StereoParameters param = CalibrationIO.load(new File(calibDir, "stereo.yaml"));

// load and convert images into a BoofCV format

BufferedImage origLeft = UtilImageIO.loadImage(imageDir, "chair01_left.jpg");

BufferedImage origRight = UtilImageIO.loadImage(imageDir, "chair01_right.jpg");

GrayU8 distLeft = ConvertBufferedImage.convertFrom(origLeft, (GrayU8)null);

GrayU8 distRight = ConvertBufferedImage.convertFrom(origRight, (GrayU8)null);

// rectify images

GrayU8 rectLeft = distLeft.createSameShape();

GrayU8 rectRight = distRight.createSameShape();

rectify(distLeft, distRight, param, rectLeft, rectRight);

// compute disparity

int disparityRange = 60;

GrayU8 disparity = denseDisparity(rectLeft, rectRight, 5, 10, disparityRange);

// GrayF32 disparity = denseDisparitySubpixel(rectLeft, rectRight, 5, 10, disparityRange);

// show results

BufferedImage visualized = VisualizeImageData.disparity(disparity, null, disparityRange, 0);

var gui = new ListDisplayPanel();

gui.addImage(rectLeft, "Rectified");

gui.addImage(visualized, "Disparity");

ShowImages.showWindow(gui, "Stereo Disparity", true);

}

}