Difference between revisions of "Example Image Stitching"

Revision as of 05:50, 5 December 2012

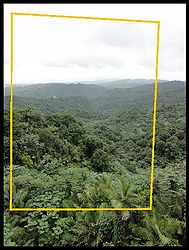

Image Stitching Example

- Images stitched together using example code

Image stitching refers to combining two or more overlapping images together into a single large image. The goal is to find transforms which minimize the error in overlapping regions and provide a smooth transition between images. Below is an example of point feature based image stitching.

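Example File: [ExampleImageStitching.java](https://github.com/lessthanoptimal/BoofCV/blob/v0.12/examples/src/boofcv/examples/ExampleImageStitching.java)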

Concepts:

- Interest point detection

- Region descriptions

- Feature association

- Robust model fitting

- Homography

Relevant Applets:

Algorithm Introduction

Described at a high level this image stitching algorithm can be summarized as follows:

- Detect and describe point features

- Associate features together

- Robust fitting to find transform

- Render combined image

The core algorithm is written as abstracted code, which allows different models and algorithms to be swapped easily. Output examples are shown at the top of this page.

Abstracted Code

The core algorithm summarized in the previous section is now shown in code. Java generics are used to abstract away the input image type as well as the type of models being used to describe the image motion. Checks are done to make sure a compatible detector and describer have been provided.

/**

* Using abstracted code, find a transform which minimizes the difference between corresponding features

* in both images. This code is completely model independent and is the core algorithm.

*/

public static<T extends ImageSingleBand, FD extends TupleDesc> Homography2D_F64

computeTransform( T imageA , T imageB ,

DetectDescribePoint<T,FD> detDesc ,

GeneralAssociation<FD> associate ,

ModelMatcher<Homography2D_F64,AssociatedPair> modelMatcher )

{

// storage for the feature locations and descriptions found in each image

List<Point2D_F64> pointsA = new ArrayList<Point2D_F64>();

FastQueue<FD> descA = UtilFeature.createQueue(detDesc,100);

List<Point2D_F64> pointsB = new ArrayList<Point2D_F64>();

FastQueue<FD> descB = UtilFeature.createQueue(detDesc,100);

// extract feature locations and descriptions from each image

describeImage(imageA, detDesc, pointsA, descA);

describeImage(imageB, detDesc, pointsB, descB);

// Associate features between the two images

associate.setSource(descA);

associate.setDestination(descB);

associate.associate();

// create a list of AssociatedPairs that tell the model matcher how a feature moved

FastQueue<AssociatedIndex> matches = associate.getMatches();

List<AssociatedPair> pairs = new ArrayList<AssociatedPair>();

for( int i = 0; i < matches.size(); i++ ) {

AssociatedIndex match = matches.get(i);

Point2D_F64 a = pointsA.get(match.src);

Point2D_F64 b = pointsB.get(match.dst);

pairs.add( new AssociatedPair(a,b,false));

}

// find the best fit model to describe the change between these images

if( !modelMatcher.process(pairs) )

throw new RuntimeException("Model Matcher failed!");

// return the found image transform

return modelMatcher.getModel();

}
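To make the association step more concrete, here is a minimal library-free sketch of greedy matching with a Euclidean score. It is not BoofCV's implementation: the class `GreedyAssociationSketch` and its methods are hypothetical, descriptors are plain `double[]` arrays, and a match is kept only when each feature is the other's best match. Reading the final `true` passed to `FactoryAssociation.greedy` later on this page as enabling a similar backwards check is an assumption.

```java
import java.util.ArrayList;
import java.util.List;

/** Library-free sketch of greedy feature association (hypothetical helper, not BoofCV code). */
final class GreedyAssociationSketch {

    /** Squared Euclidean distance between two descriptors of equal length. */
    static double distanceSq(double[] a, double[] b) {
        double sum = 0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return sum;
    }

    /** Index of the descriptor in 'candidates' closest to 'query', or -1 if the list is empty. */
    static int bestMatch(double[] query, List<double[]> candidates) {
        int best = -1;
        double bestScore = Double.MAX_VALUE;
        for (int i = 0; i < candidates.size(); i++) {
            double score = distanceSq(query, candidates.get(i));
            if (score < bestScore) {
                bestScore = score;
                best = i;
            }
        }
        return best;
    }

    /**
     * Returns {srcIndex, dstIndex} pairs. A pair is kept only when each feature
     * is the other's best match (mutual, or "backwards", validation).
     */
    static List<int[]> associate(List<double[]> src, List<double[]> dst) {
        List<int[]> matches = new ArrayList<>();
        for (int i = 0; i < src.size(); i++) {
            int j = bestMatch(src.get(i), dst);
            if (j >= 0 && bestMatch(dst.get(j), src) == i) {
                matches.add(new int[]{i, j});
            }
        }
        return matches;
    }
}
```

The returned index pairs play the same role as the `AssociatedIndex` entries above: each one says which feature in the first image corresponds to which feature in the second. The mutual check discards ambiguous features at the cost of a second nearest-neighbor search.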

In the function below, a description of each image is computed by finding interest points and their descriptions.

/**

* Detects features inside the image and computes descriptions at those points.

*/

private static <T extends ImageSingleBand, FD extends TupleDesc>

void describeImage(T image,

DetectDescribePoint<T,FD> detDesc,

List<Point2D_F64> points,

FastQueue<FD> listDescs) {

detDesc.detect(image);

listDescs.reset();

for( int i = 0; i < detDesc.getNumberOfFeatures(); i++ ) {

points.add( detDesc.getLocation(i).copy() );

listDescs.grow().setTo(detDesc.getDescriptor(i));

}

}

Declaration of Specific Algorithms

In the previous section abstracted code was used to detect and associate features. In the function below, the specific algorithms which are passed in are defined. This is also where one could change the type of feature used to see how that affects performance.

A homography is used to describe the transform between the images. Homographies assume that all features lie on a plane. While this might sound overly restrictive, it is a good model when dealing with objects that are far away or when the camera is rotating. While a homography should be a good fit for these images, this model does not take into account lens distortion and other physical effects, which introduces some artifacts.
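As a rough illustration of what a homography does to a pixel, the sketch below applies a 3x3 matrix to a point in homogeneous coordinates and divides by the third component. The class `HomographySketch`, the row-major `double[][]` layout, and the `warpedBounds` helper are all assumptions made for this example and are not part of BoofCV.

```java
/** Library-free sketch of applying a 3x3 homography to a pixel (hypothetical helper). */
final class HomographySketch {

    /**
     * Maps (x, y) through the row-major 3x3 matrix h, then divides by the
     * third homogeneous coordinate. Returns {x', y'}.
     */
    static double[] apply(double[][] h, double x, double y) {
        double xh = h[0][0] * x + h[0][1] * y + h[0][2];
        double yh = h[1][0] * x + h[1][1] * y + h[1][2];
        double w  = h[2][0] * x + h[2][1] * y + h[2][2];
        if (w == 0) throw new ArithmeticException("point maps to infinity");
        return new double[]{xh / w, yh / w};
    }

    /** Axis-aligned bounds {minX, minY, maxX, maxY} of an image's corners after warping. */
    static double[] warpedBounds(double[][] h, int width, int height) {
        double[][] corners = {{0, 0}, {width, 0}, {width, height}, {0, height}};
        double minX = Double.POSITIVE_INFINITY, minY = Double.POSITIVE_INFINITY;
        double maxX = Double.NEGATIVE_INFINITY, maxY = Double.NEGATIVE_INFINITY;
        for (double[] c : corners) {
            double[] p = apply(h, c[0], c[1]);
            minX = Math.min(minX, p[0]);
            maxX = Math.max(maxX, p[0]);
            minY = Math.min(minY, p[1]);
            maxY = Math.max(maxY, p[1]);
        }
        return new double[]{minX, minY, maxX, maxY};
    }
}
```

Computing the bounds of the warped corners is one simple way to decide how large the output canvas must be before the second image is rendered on top of the first.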

/**

* Given two input images, create and display an image where the two have been overlaid on top of each other.

*/

public static <T extends ImageSingleBand>

void stitch( BufferedImage imageA , BufferedImage imageB , Class<T> imageType )

{

T inputA = ConvertBufferedImage.convertFromSingle(imageA, null, imageType);

T inputB = ConvertBufferedImage.convertFromSingle(imageB, null, imageType);

// Detect using the standard SURF feature detector and describer

DetectDescribePoint detDesc = FactoryDetectDescribe.surf(1, 2, 200, 1, 9, 4, 4, true, imageType);

ScoreAssociation<SurfFeature> scorer = FactoryAssociation.scoreEuclidean(SurfFeature.class,true);

GeneralAssociation<SurfFeature> associate = FactoryAssociation.greedy(scorer,2,-1,true);

// fit the images using a homography. This works well for rotations and distant objects.

GenerateHomographyLinear modelFitter = new GenerateHomographyLinear(true);

DistanceHomographySq distance = new DistanceHomographySq();

ModelMatcher<Homography2D_F64,AssociatedPair> modelMatcher =

new Ransac<Homography2D_F64,AssociatedPair>(123,modelFitter,distance,60,9);

Homography2D_F64 H = computeTransform(inputA, inputB, detDesc, associate, modelMatcher);

// draw the results

HomographyStitchPanel panel = new HomographyStitchPanel(0.5,inputA.width,inputA.height);

panel.configure(imageA,imageB,H);

ShowImages.showWindow(panel,"Stitched Images");

}
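The robust fitting step can be sketched without any library. The example below is not BoofCV's `Ransac` class: to stay short it swaps the homography for a 2D translation model, and the class `RansacTranslationSketch` and its `Pair` type are hypothetical. It assumes a non-negative squared-error threshold. Reading the `123`, `60` and `9` passed to `Ransac` above as a random seed, an iteration limit and an inlier threshold is likewise an assumption.

```java
import java.util.List;
import java.util.Random;

/** Library-free RANSAC sketch using a 2D translation model (hypothetical, not BoofCV's Ransac). */
final class RansacTranslationSketch {

    /** A matched pair: (ax, ay) in image A and (bx, by) in image B. */
    static final class Pair {
        final double ax, ay, bx, by;
        Pair(double ax, double ay, double bx, double by) {
            this.ax = ax; this.ay = ay; this.bx = bx; this.by = by;
        }
    }

    /** Squared distance between point b and point a shifted by (tx, ty). */
    static double errorSq(Pair p, double tx, double ty) {
        double dx = p.ax + tx - p.bx;
        double dy = p.ay + ty - p.by;
        return dx * dx + dy * dy;
    }

    /** Returns {tx, ty, inlierCount}, or null if there are no pairs or no iterations. */
    static double[] fit(List<Pair> pairs, long seed, int maxIterations, double thresholdSq) {
        if (pairs.isEmpty() || maxIterations <= 0) return null;
        Random rand = new Random(seed);
        double[] best = null;
        for (int iter = 0; iter < maxIterations; iter++) {
            // minimal sample: a single pair fully determines a translation
            Pair sample = pairs.get(rand.nextInt(pairs.size()));
            double tx = sample.bx - sample.ax;
            double ty = sample.by - sample.ay;

            // score the hypothesis by counting the pairs that agree with it
            int inliers = 0;
            for (Pair p : pairs) {
                if (errorSq(p, tx, ty) <= thresholdSq) inliers++;
            }
            if (best == null || inliers > best[2]) {
                best = new double[]{tx, ty, inliers};
            }
        }
        // refine: average the translation over the inliers of the best hypothesis
        double sumX = 0, sumY = 0;
        int n = 0;
        for (Pair p : pairs) {
            if (errorSq(p, best[0], best[1]) <= thresholdSq) {
                sumX += p.bx - p.ax;
                sumY += p.by - p.ay;
                n++;
            }
        }
        return new double[]{sumX / n, sumY / n, n};
    }
}
```

A homography needs at least four pairs per sample instead of one, but the loop has the same shape: draw a minimal sample, fit a model, count inliers, and keep the hypothesis with the most support. Incorrect associations end up outside the inlier set and do not influence the final estimate.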