Performance:OpenCV:BoofCV:2011

Revision as of 13:23, 1 November 2011

Relative Speed of BoofCV and OpenCV

A key element in real-time computer vision is how fast the computer vision library is. Common wisdom dictates that OpenCV should crush BoofCV as far as speed is concerned, because OpenCV is written in C/C++ and has been developed and optimized since 1999, while BoofCV is written in Java and started development in the summer of 2011. It is true that C/C++ is faster than Java, but being written in C/C++ does not by itself make an implementation fast.

Tested Operations

- Gaussian blur with a 5x5 kernel

- Sobel gradient with a 3x3 kernel

- Harris corner detector with 5x5 non-maximum suppression

- Canny edge detector with basic contour extraction

- Hough line detection using polar coordinates

- SURF feature detection and description

Comparing low-level algorithms was much easier than comparing high-level algorithms. Low-level image processing algorithms typically don't require any parameters and have more standardized implementations. High-level algorithms are open to more interpretation, and often one library had configuration parameters not available in the other. For high-level algorithms, the parameters were tuned so that both libraries produced similar output. Note that only speed is considered, not output quality, with the exception of SURF; a more detailed study of SURF is available on the SURF performance page. This matters because one library might be much faster than another only because it produces lower-quality output.

In other words, TAKE THIS STUDY WITH A GRAIN OF SALT.

Performance Results

|

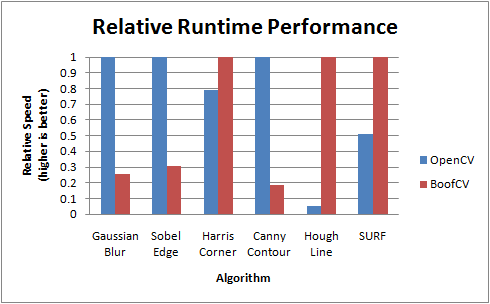

| OpenCV does better on low level operations, while BoofCV does better on most of the high level operations. Higher bars are better. |

As expected, OpenCV performed very well on low-level image processing routines. These routines can be highly optimized and can take advantage of machine-specific vectorization instructions. Java takes a significant speed hit when traversing arrays because it must, in general, perform a bounds check on every array access, which is not required in C/C++. In addition, every element of a newly allocated Java array is initialized to zero, even when this is not needed. Past experience has shown that Java tends to be about 3 times slower in array-heavy arithmetic, which these results confirm.
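To make these two JVM overheads concrete, here is a minimal plain-Java sketch of a 3x3 horizontal Sobel gradient, one of the tested low-level operations. The class `SobelSketch` and its method are hypothetical illustrations, not BoofCV or OpenCV code; the sketch assumes a row-major `float[]` grayscale image and leaves border pixels at zero. The comments mark where zero-initialization and bounds checks occur.

```java
// Hypothetical illustration only; not BoofCV or OpenCV code.
// Horizontal Sobel derivative over a row-major grayscale image.
final class SobelSketch {
    private SobelSketch() {}

    /** Computes the x-derivative for interior pixels; border pixels stay 0. */
    static float[] gradientX(float[] img, int width, int height) {
        // Java zero-fills every newly allocated array, so the border pixels
        // are already 0 even though the loop below never writes them.
        float[] out = new float[width * height];
        for (int y = 1; y < height - 1; y++) {
            for (int x = 1; x < width - 1; x++) {
                int i = y * width + x;
                // Each img[...] read is bounds-checked by the JVM unless the
                // JIT compiler can prove the index is always in range.
                float right = img[i - width + 1] + 2f * img[i + 1] + img[i + width + 1];
                float left = img[i - width - 1] + 2f * img[i - 1] + img[i + width - 1];
                out[i] = right - left;
            }
        }
        return out;
    }
}
```

For a 5x4 image whose pixel values equal their x coordinate, every interior output is 8: the right column sums to 4(x+1) and the left column to 4(x-1).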

For high-level algorithms, the implementation language seems to matter less. The biggest determining factor is most likely which algorithm was implemented and how well it was implemented. These implementation differences can explain BoofCV's better performance in many of the operations despite its language disadvantage.

Test Setup

For each operation, enough iterations were performed to take at least one second, and the number of operations per second (ops/sec) was computed. To avoid degrading results for very fast operations, the number of iterations needed to run for at least one second was computed automatically. For display purposes, the ops/sec metric was converted into a relative performance metric by dividing it by the ops/sec of the best-performing library.
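A minimal sketch of this timing procedure, assuming a doubling rule for growing the iteration count; the page does not say how the benchmark chose the count, and `OpsPerSecondSketch` is a hypothetical name. It times batches of an operation until one batch lasts at least `minSeconds`, reports ops/sec for that batch, and normalizes a set of results by the best one.

```java
// Hypothetical benchmark helper illustrating the ops/sec procedure;
// not the actual BoofCV or OpenCV benchmark code.
final class OpsPerSecondSketch {
    private OpsPerSecondSketch() {}

    /**
     * Runs op in batches, doubling the batch size (an assumed rule) until one
     * batch takes at least minSeconds, then returns operations per second.
     */
    static double opsPerSecond(Runnable op, double minSeconds) {
        long iterations = 1;
        while (true) {
            long start = System.nanoTime();
            for (long i = 0; i < iterations; i++) {
                op.run();
            }
            double elapsed = (System.nanoTime() - start) * 1e-9;
            if (elapsed >= minSeconds) {
                return iterations / elapsed;
            }
            if (iterations > Long.MAX_VALUE / 2) {
                throw new IllegalStateException("operation too fast to time");
            }
            iterations *= 2; // batch was too short to time reliably
        }
    }

    /** Divides every ops/sec value by the largest, so the fastest library scores 1.0. */
    static double[] relativeToBest(double[] opsPerSec) {
        if (opsPerSec.length == 0) {
            throw new IllegalArgumentException("no results");
        }
        double best = opsPerSec[0];
        for (double v : opsPerSec) {
            best = Math.max(best, v);
        }
        double[] relative = new double[opsPerSec.length];
        for (int i = 0; i < opsPerSec.length; i++) {
            relative[i] = opsPerSec[i] / best;
        }
        return relative;
    }
}
```

Timing a whole batch rather than individual calls keeps timer resolution and per-call overhead from dominating very fast operations, which is the degradation described above. For example, `relativeToBest(new double[] {50, 200, 100})` yields `{0.25, 1.0, 0.5}`.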

If both libraries had equivalent parameters, then the same values were used. Otherwise, parameters were hand tuned until they produced similar results. For feature extraction algorithms, this was defined as producing the same number of features.
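The parameters here were tuned by hand. As a swapped-in alternative, not the procedure used in this study, the same criterion (equal feature counts) could be automated with a bisection search over a single detection threshold. This sketch assumes the feature count never increases as the threshold rises; `ThresholdMatchSketch` and the `countAt` callback, which would run a detector at a given threshold and return how many features it found, are hypothetical.

```java
import java.util.function.DoubleToIntFunction;

// Hypothetical helper: bisection search for a detector threshold that yields
// a target feature count. Assumes countAt is non-increasing in the threshold.
final class ThresholdMatchSketch {
    private ThresholdMatchSketch() {}

    static double matchFeatureCount(DoubleToIntFunction countAt, int target,
                                    double lo, double hi, int maxSteps) {
        for (int step = 0; step < maxSteps; step++) {
            double mid = 0.5 * (lo + hi);
            int count = countAt.applyAsInt(mid);
            if (count == target) {
                return mid;
            }
            if (count > target) {
                lo = mid; // too many features: raise the threshold
            } else {
                hi = mid; // too few features: lower the threshold
            }
        }
        // No exact match found: return whichever bracket end is closer.
        int loCount = countAt.applyAsInt(lo);
        int hiCount = countAt.applyAsInt(hi);
        return Math.abs(loCount - target) <= Math.abs(hiCount - target) ? lo : hi;
    }
}
```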

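Test Source Code:

- BoofCV: https://github.com/lessthanoptimal/BoofCV/blob/master/evaluation/benchmark/src/boofcv/benchmark/opencv/BenchmarkForOpenCV.java

- OpenCV: https://github.com/lessthanoptimal/BoofCV/tree/master/evaluation/misc/ip/opencv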

Testing Procedure:

- Kill all extraneous processes.

- Run the benchmark application for each library.

- For BoofCV, each test was run one at a time to improve the stability of the results. See the Matrix benchmark page for a discussion of this issue.

- As expected, running the OpenCV tests one at a time produced no improvement.

- Run the whole experiment 5 times for each library and record the best performance.
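A minimal sketch of the best-of-five rule, assuming each run of the experiment reports a single ops/sec figure where higher is better; `BestOfSketch` is a hypothetical name. One plausible rationale for keeping the maximum rather than the mean is that it discards runs slowed by background activity, the same noise the "kill all extraneous processes" step tries to remove.

```java
import java.util.function.DoubleSupplier;

// Hypothetical helper: repeat an experiment and keep the best (highest) ops/sec.
final class BestOfSketch {
    private BestOfSketch() {}

    static double bestOf(int trials, DoubleSupplier runExperiment) {
        if (trials < 1) {
            throw new IllegalArgumentException("need at least one trial");
        }
        double best = Double.NEGATIVE_INFINITY;
        for (int t = 0; t < trials; t++) {
            best = Math.max(best, runExperiment.getAsDouble());
        }
        return best;
    }
}
```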

Test Computer:

- Ubuntu 10.10, 64-bit

- Intel Core 2 Quad Q6600, 2.4 GHz

- Memory: 8 GB

- g++ 4.4.5

- Java(TM) SE Runtime Environment (build 1.6.0_26-b03)

Compiler and JRE Configuration:

- All native libraries were compiled with -O3

- Java applications were run with no special flags

Library Versions:

- BoofCV previous release 10/2011

- OpenCV 2.3.1 SVN r6879