Example Image Stitching

Revision as of 15:36, 25 December 2013

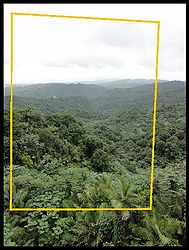

- Images stitched together using example code

This example demonstrates several aspects of BoofCV by stitching images together. Image stitching combines two or more overlapping images into a single larger image. The goal is to find a 2D geometric transform that minimizes the error (difference in appearance) in the overlapping regions. There are many ways to do this. In the example below, point features are detected and associated between the images, and a 2D transform is then estimated robustly from the associated features.

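Example File: ExampleImageStitching.java (https://github.com/lessthanoptimal/BoofCV/blob/v0.16/examples/src/boofcv/examples/geometry/ExampleImageStitching.java)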

Concepts:

- Interest point detection

- Region descriptions

- Feature association

- Robust model fitting

- Homography

Relevant Applets:

Related Examples:

Algorithm Introduction

Described at a high level, this image stitching algorithm can be summarized as follows:

- Detect and describe point features

- Associate features together

- Robust fitting to find transform

- Render combined image
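The association step in the list above can be illustrated without any library. The following is a minimal plain-Java sketch, assuming descriptors are plain `double[]` arrays compared by squared Euclidean distance, and assuming a match is kept only when it is mutual (each feature is the other's nearest neighbour). The class `GreedyAssociationSketch` and all of its methods are hypothetical names made up for illustration; this is not BoofCV's implementation.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of greedy descriptor association; not BoofCV code. */
final class GreedyAssociationSketch {

    /** Squared Euclidean distance between two descriptors of equal length. */
    static double distanceSq(double[] a, double[] b) {
        double sum = 0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return sum;
    }

    /** Index of the descriptor in candidates closest to query, or -1 if candidates is empty. */
    static int nearest(double[] query, List<double[]> candidates) {
        int best = -1;
        double bestScore = Double.MAX_VALUE;
        for (int i = 0; i < candidates.size(); i++) {
            double score = distanceSq(query, candidates.get(i));
            if (score < bestScore) {
                bestScore = score;
                best = i;
            }
        }
        return best;
    }

    /**
     * Returns {srcIndex, dstIndex} pairs. A pair is kept only if its distance is within
     * maxDistanceSq and the dst feature's own nearest neighbour in src is the same src feature.
     */
    static List<int[]> associate(List<double[]> src, List<double[]> dst, double maxDistanceSq) {
        List<int[]> matches = new ArrayList<>();
        for (int i = 0; i < src.size(); i++) {
            int j = nearest(src.get(i), dst);
            if (j < 0 || distanceSq(src.get(i), dst.get(j)) > maxDistanceSq)
                continue;
            // backwards check: reject the match unless it is mutual
            if (nearest(dst.get(j), src) == i)
                matches.add(new int[]{i, j});
        }
        return matches;
    }

    public static void main(String[] args) {
        List<double[]> src = new ArrayList<>();
        src.add(new double[]{0, 0});
        src.add(new double[]{10, 10});
        src.add(new double[]{0.4, 0});   // competes with src[0] for the same dst feature
        List<double[]> dst = new ArrayList<>();
        dst.add(new double[]{10.1, 9.9});
        dst.add(new double[]{0.1, 0});

        for (int[] m : associate(src, dst, 1.0))
            System.out.println(m[0] + " -> " + m[1]);
    }
}
```

The mutual check discards the third source feature, which is close to a destination feature but is not that feature's best match. Ambiguous matches like this one are a common source of outliers, which is why a robust fitting step follows association.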

The core algorithm is written against abstract interfaces, which allows different models and algorithms to be swapped in easily. Output examples are shown at the top of this page.
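To make the robust fitting step more concrete, here is a minimal RANSAC sketch in plain Java. It deliberately swaps the homography for a much simpler model, a pure 2D translation, so that one associated pair is enough to generate a hypothesis. The class `RansacTranslationSketch` and its methods are hypothetical and are not BoofCV's `Ransac` class. The values 123, 60, and 9 in `main` echo the numbers passed to `Ransac` in the example code; treating them as random seed, iteration count, and squared-distance threshold is an assumption of this sketch.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

/** Hypothetical RANSAC sketch using a 2D translation model; not BoofCV code. */
final class RansacTranslationSketch {

    /** Squared distance between the pair's second point and its first point shifted by (tx, ty). */
    static double errorSq(double[] pair, double tx, double ty) {
        double dx = pair[0] + tx - pair[2];
        double dy = pair[1] + ty - pair[3];
        return dx * dx + dy * dy;
    }

    /**
     * Each pair is {ax, ay, bx, by}. Returns {tx, ty, inlierCount} for the hypothesis with
     * the most inliers, or null if pairs is empty or maxIterations is not positive.
     */
    static double[] fit(List<double[]> pairs, long seed, int maxIterations, double thresholdSq) {
        if (pairs.isEmpty())
            return null;
        Random rand = new Random(seed);
        double[] best = null;
        for (int iter = 0; iter < maxIterations; iter++) {
            // a translation is fully determined by a single pair, so sample one at random
            double[] sample = pairs.get(rand.nextInt(pairs.size()));
            double tx = sample[2] - sample[0];
            double ty = sample[3] - sample[1];

            // count how many pairs agree with this hypothesis
            int inliers = 0;
            for (double[] p : pairs) {
                if (errorSq(p, tx, ty) <= thresholdSq)
                    inliers++;
            }
            if (best == null || inliers > best[2])
                best = new double[]{tx, ty, inliers};
        }
        return best;
    }

    public static void main(String[] args) {
        List<double[]> pairs = new ArrayList<>();
        // eight correct matches: every point moved by (5, -2)
        for (int i = 0; i < 8; i++)
            pairs.add(new double[]{i * 10, i * 3, i * 10 + 5, i * 3 - 2});
        // two bad associations that would distort a plain least-squares fit
        pairs.add(new double[]{0, 0, 200, 150});
        pairs.add(new double[]{50, 50, -90, 300});

        double[] model = fit(pairs, 123, 60, 9);
        System.out.println("tx=" + model[0] + " ty=" + model[1] + " inliers=" + (int) model[2]);
    }
}
```

A homography needs at least four pairs per hypothesis rather than one, but the loop has the same shape: sample a minimal set, generate a model, count the pairs that fall within the threshold, and keep the hypothesis with the most support.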

Example Code

public class ExampleImageStitching {

/**

* Using abstracted code, find a transform which minimizes the difference between corresponding features

* in both images. This code is completely model independent and is the core algorithm.

*/

public static<T extends ImageSingleBand, FD extends TupleDesc> Homography2D_F64

computeTransform( T imageA , T imageB ,

DetectDescribePoint<T,FD> detDesc ,

AssociateDescription<FD> associate ,

ModelMatcher<Homography2D_F64,AssociatedPair> modelMatcher )

{

// storage for the feature locations and descriptions found in each image

List<Point2D_F64> pointsA = new ArrayList<Point2D_F64>();

FastQueue<FD> descA = UtilFeature.createQueue(detDesc,100);

List<Point2D_F64> pointsB = new ArrayList<Point2D_F64>();

FastQueue<FD> descB = UtilFeature.createQueue(detDesc,100);

// extract feature locations and descriptions from each image

describeImage(imageA, detDesc, pointsA, descA);

describeImage(imageB, detDesc, pointsB, descB);

// Associate features between the two images

associate.setSource(descA);

associate.setDestination(descB);

associate.associate();

// create a list of AssociatedPairs that tell the model matcher how a feature moved

FastQueue<AssociatedIndex> matches = associate.getMatches();

List<AssociatedPair> pairs = new ArrayList<AssociatedPair>();

for( int i = 0; i < matches.size(); i++ ) {

AssociatedIndex match = matches.get(i);

Point2D_F64 a = pointsA.get(match.src);

Point2D_F64 b = pointsB.get(match.dst);

pairs.add( new AssociatedPair(a,b,false));

}

// find the best fit model to describe the change between these images

if( !modelMatcher.process(pairs) )

throw new RuntimeException("Model Matcher failed!");

// return the found image transform

return modelMatcher.getModelParameters().copy();

}

/**

* Detects features inside the two images and computes descriptions at those points.

*/

private static <T extends ImageSingleBand, FD extends TupleDesc>

void describeImage(T image,

DetectDescribePoint<T,FD> detDesc,

List<Point2D_F64> points,

FastQueue<FD> listDescs) {

detDesc.detect(image);

listDescs.reset();

for( int i = 0; i < detDesc.getNumberOfFeatures(); i++ ) {

points.add( detDesc.getLocation(i).copy() );

listDescs.grow().setTo(detDesc.getDescription(i));

}

}

/**

* Given two input images create and display an image where the two have been overlaid on top of each other.

*/

public static <T extends ImageSingleBand>

void stitch( BufferedImage imageA , BufferedImage imageB , Class<T> imageType )

{

T inputA = ConvertBufferedImage.convertFromSingle(imageA, null, imageType);

T inputB = ConvertBufferedImage.convertFromSingle(imageB, null, imageType);

// Detect and describe features using the standard SURF detector and descriptor

DetectDescribePoint detDesc = FactoryDetectDescribe.surfStable(

new ConfigFastHessian(1, 2, 200, 1, 9, 4, 4), null,null, imageType);

ScoreAssociation<SurfFeature> scorer = FactoryAssociation.scoreEuclidean(SurfFeature.class,true);

AssociateDescription<SurfFeature> associate = FactoryAssociation.greedy(scorer,2,true);

// fit the images using a homography. This works well for rotations and distant objects.

ModelManager<Homography2D_F64> manager = new ModelManagerHomography2D_F64();

GenerateHomographyLinear modelFitter = new GenerateHomographyLinear(true);

DistanceHomographySq distance = new DistanceHomographySq();

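// RANSAC arguments: random seed, model manager, model generator, distance function,

// maximum iterations (60), inlier threshold (9, in squared pixels for this distance)

ModelMatcher<Homography2D_F64,AssociatedPair> modelMatcher =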

new Ransac<Homography2D_F64,AssociatedPair>(123,manager,modelFitter,distance,60,9);

Homography2D_F64 H = computeTransform(inputA, inputB, detDesc, associate, modelMatcher);

renderStitching(imageA,imageB,H);

}

/**

* Renders and displays the stitched together images

*/

public static void renderStitching( BufferedImage imageA, BufferedImage imageB ,

Homography2D_F64 fromAtoB )

{

// specify size of output image

double scale = 0.5;

int outputWidth = imageA.getWidth();

int outputHeight = imageA.getHeight();

// Convert into a BoofCV color format

MultiSpectral<ImageFloat32> colorA =

ConvertBufferedImage.convertFromMulti(imageA, null,true, ImageFloat32.class);

MultiSpectral<ImageFloat32> colorB =

ConvertBufferedImage.convertFromMulti(imageB, null,true, ImageFloat32.class);

// Where the output images are rendered into

MultiSpectral<ImageFloat32> work = new MultiSpectral<ImageFloat32>(ImageFloat32.class,outputWidth,outputHeight,3);

// Adjust the transform so that the whole image can appear inside of it

Homography2D_F64 fromAToWork = new Homography2D_F64(scale,0,colorA.width/4,0,scale,colorA.height/4,0,0,1);

Homography2D_F64 fromWorkToA = fromAToWork.invert(null);

// Used to render the results onto an image

PixelTransformHomography_F32 model = new PixelTransformHomography_F32();

ImageDistort<MultiSpectral<ImageFloat32>> distort =

DistortSupport.createDistortMS(ImageFloat32.class, model, new ImplBilinearPixel_F32(), null);

// Render first image

model.set(fromWorkToA);

distort.apply(colorA,work);

// Render second image

Homography2D_F64 fromWorkToB = fromWorkToA.concat(fromAtoB,null);

model.set(fromWorkToB);

distort.apply(colorB,work);

// Convert the rendered image into a BufferedImage

BufferedImage output = new BufferedImage(work.width,work.height,imageA.getType());

ConvertBufferedImage.convertTo(work,output,true);

Graphics2D g2 = output.createGraphics();

// draw lines around the distorted image to make it easier to see

Homography2D_F64 fromBtoWork = fromWorkToB.invert(null);

Point2D_I32 corners[] = new Point2D_I32[4];

corners[0] = renderPoint(0,0,fromBtoWork);

corners[1] = renderPoint(colorB.width,0,fromBtoWork);

corners[2] = renderPoint(colorB.width,colorB.height,fromBtoWork);

corners[3] = renderPoint(0,colorB.height,fromBtoWork);

g2.setColor(Color.ORANGE);

g2.setStroke(new BasicStroke(4));

g2.drawLine(corners[0].x,corners[0].y,corners[1].x,corners[1].y);

g2.drawLine(corners[1].x,corners[1].y,corners[2].x,corners[2].y);

g2.drawLine(corners[2].x,corners[2].y,corners[3].x,corners[3].y);

g2.drawLine(corners[3].x,corners[3].y,corners[0].x,corners[0].y);

ShowImages.showWindow(output,"Stitched Images");

}

private static Point2D_I32 renderPoint( int x0 , int y0 , Homography2D_F64 fromBtoWork )

{

Point2D_F64 result = new Point2D_F64();

HomographyPointOps_F64.transform(fromBtoWork, new Point2D_F64(x0, y0), result);

return new Point2D_I32((int)result.x,(int)result.y);

}

public static void main( String args[] ) {

BufferedImage imageA,imageB;

imageA = UtilImageIO.loadImage("../data/evaluation/stitch/mountain_rotate_01.jpg");

imageB = UtilImageIO.loadImage("../data/evaluation/stitch/mountain_rotate_03.jpg");

stitch(imageA,imageB, ImageFloat32.class);

imageA = UtilImageIO.loadImage("../data/evaluation/stitch/kayak_01.jpg");

imageB = UtilImageIO.loadImage("../data/evaluation/stitch/kayak_03.jpg");

stitch(imageA,imageB, ImageFloat32.class);

imageA = UtilImageIO.loadImage("../data/evaluation/scale/rainforest_01.jpg");

imageB = UtilImageIO.loadImage("../data/evaluation/scale/rainforest_02.jpg");

stitch(imageA,imageB, ImageFloat32.class);

}

}
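The chain of transforms in renderStitching can be checked by hand with plain 3x3 matrices. The sketch below is a hypothetical, library-free illustration: the class `HomographyChainSketch`, its methods, the 640x480 image size, and the translation standing in for fromAtoB are all assumptions made for the example. It also assumes that concatenating fromWorkToA with fromAtoB means "apply fromWorkToA first, then fromAtoB", which is what the variable name fromWorkToB suggests.

```java
/** Hypothetical plain-Java sketch of the 3x3 homography chain; not BoofCV code. */
final class HomographyChainSketch {

    /** Matrix product a*b of two 3x3 matrices. */
    static double[][] multiply(double[][] a, double[][] b) {
        double[][] c = new double[3][3];
        for (int i = 0; i < 3; i++)
            for (int j = 0; j < 3; j++)
                for (int k = 0; k < 3; k++)
                    c[i][j] += a[i][k] * b[k][j];
        return c;
    }

    /** Applies h to pixel (x, y), including the division by the homogeneous coordinate. */
    static double[] transform(double[][] h, double x, double y) {
        double w = h[2][0] * x + h[2][1] * y + h[2][2];
        return new double[]{
                (h[0][0] * x + h[0][1] * y + h[0][2]) / w,
                (h[1][0] * x + h[1][1] * y + h[1][2]) / w};
    }

    /** Homography that scales by s and then shifts by (tx, ty). */
    static double[][] scaleShift(double s, double tx, double ty) {
        return new double[][]{{s, 0, tx}, {0, s, ty}, {0, 0, 1}};
    }

    /** Closed-form inverse of scaleShift(s, tx, ty); a general homography needs a full 3x3 inverse. */
    static double[][] scaleShiftInverse(double s, double tx, double ty) {
        return new double[][]{{1 / s, 0, -tx / s}, {0, 1 / s, -ty / s}, {0, 0, 1}};
    }

    public static void main(String[] args) {
        // assumed 640x480 image A: scale 0.5, shift by a quarter of the width and height
        double[][] fromWorkToA = scaleShiftInverse(0.5, 640 / 4, 480 / 4);

        // made-up stand-in for the estimated homography: image B is offset by (-300, 20)
        double[][] fromAtoB = {{1, 0, -300}, {0, 1, 20}, {0, 0, 1}};

        // apply fromWorkToA first, then fromAtoB
        double[][] fromWorkToB = multiply(fromAtoB, fromWorkToA);

        double[] inA = transform(fromWorkToA, 160, 120);
        double[] inB = transform(fromWorkToB, 160, 120);
        System.out.println("work (160,120) -> A (" + inA[0] + ", " + inA[1] + ")");
        System.out.println("work (160,120) -> B (" + inB[0] + ", " + inB[1] + ")");
    }
}
```

Rendering walks over the pixels of the work image and asks where each one came from in the source image. That is why the distortion is driven by the work-to-A and work-to-B transforms, while the outline of image B is drawn using the inverse, B-to-work.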