Example Multi Baseline Stereo

From BoofCV

Jump to navigationJump to searchThe printable version is no longer supported and may have rendering errors. Please update your browser bookmarks and please use the default browser print function instead.

|

|

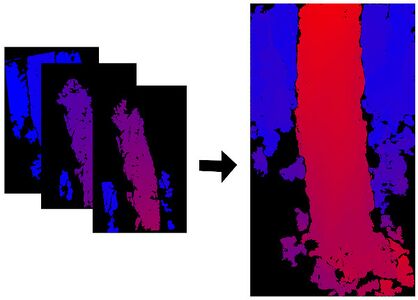

| Multiple disparity images are computed from a common view and combined into a single disparity image. | Video showing resulting point cloud |

The idea behind Multi Baseline Stereo is combine multiple stereo pairs that all share a common view into a single disparity image that is in theory more complete and less noisy. An example of how to do using an already provided algorithm is shown below. This is basically a first pass and doesn't yet have all the more advanced features included. As a warning, this is built on top of the scene reconstruction demo and the first time you run it it might take a couple of minutes as it solves a lot of other problems first.

Example Code:

Concepts:

- Structure from Motion

- Sparse Bundle Adjustment

- Uncalibrated Sparse Reconstruction

- Dense Multiview Reconstruction

Example Code

/**

* Multi Baseline Stereo (MBS) is a problem where you have one common/center image with multiple paired images that have

* different baselines from each other and the goal is to output a single combined stereo image. In BoofCV, this

* combined stereo image will be the original image's pixel as compared to the more standard rectified image. At

* the time of this writing, BoofCV only contains one MBS algorithm, which is just about the simplest one possible

* and works by combining independently computed disparity images together using a median filter to select the output.

*

* @author Peter Abeles

*/

public class ExampleMultiBaselineStereo {

public static void main( String[] args ) {

// Compute a sparse reconstruction. This will give us intrinsic and extrinsic for all views

var example = new ExampleMultiViewSparseReconstruction();

// Specifies the "center" frame to use

int centerViewIdx = 15;

example.compute("tree_snow_01.mp4", true);

// example.compute("ditch_02.mp4", true);

// example.compute("holiday_display_01.mp4", true);

// example.compute("log_building_02.mp4", true);

// example.compute("drone_park_01.mp4", false);

// example.compute("stone_sign.mp4", true);

// We need a way to load images based on their ID. In this particular case the ID encodes the array index.

var imageLookup = new LookUpImageFilesByIndex(example.imageFiles);

// Next we tell it which view to use as the "center", which acts as the common view for all disparity images.

// The process of selecting the best views to use as centers is a problem all it's own. To keep things

// we just pick a frame.

SceneWorkingGraph.View center = example.working.getAllViews().get(centerViewIdx);

// The final scene refined by bundle adjustment is created by the Working graph. However the 3D relationship

// between views is contained in the pairwise graph. A View in the working graph has a reference to the view

// in the pairwise graph. Using that we will find all connected views that have a 3D relationship

var pairedViewIdxs = new DogArray_I32();

var sbaIndexToImageID = new TIntObjectHashMap<String>();

// This relationship between pairwise and working graphs might seem (and is) a bit convoluted. The Pairwise

// graph is the initial crude sketch of what might be connected. The working graph is an intermediate

// data structure for computing the metric scene. SBA is a refinement of the working graph.

// Iterate through all connected views in the pairwise graph and mark their indexes in the working graph

center.pview.connections.forEach(( m ) -> {

// if there isn't a 3D relationship just skip it

if (!m.is3D)

return;

String connectedID = m.other(center.pview).id;

SceneWorkingGraph.View connected = example.working.views.get(connectedID);

// Make sure the pairwise view exists in the working graph too

if (connected == null)

return;

// Add this view to the index to name/ID lookup table

sbaIndexToImageID.put(connected.index, connectedID);

// Note that this view is one which acts as the second image in the stereo pair

pairedViewIdxs.add(connected.index);

});

// Add the center camera image to the ID look up table

sbaIndexToImageID.put(centerViewIdx, center.pview.id);

// Configure there stereo disparity algorithm which is used

var configDisparity = new ConfigDisparityBMBest5();

configDisparity.validateRtoL = 1;

configDisparity.texture = 0.5;

configDisparity.regionRadiusX = configDisparity.regionRadiusY = 4;

configDisparity.disparityRange = 120;

// This is the actual MBS algorithm mentioned previously. It selects the best disparity for each pixel

// in the original image using a median filter.

var multiBaseline = new MultiBaselineStereoIndependent<>(imageLookup, ImageType.SB_U8);

multiBaseline.setStereoDisparity(

FactoryStereoDisparity.blockMatchBest5(configDisparity, GrayU8.class, GrayF32.class));

// Print out verbose debugging and profile information

multiBaseline.setVerbose(System.out, null);

multiBaseline.setVerboseProfiling(System.out);

// Improve stereo by removing small regions, which tends to be noise. Consider adjusting the region size.

multiBaseline.setDisparitySmoother(FactoryStereoDisparity.removeSpeckle(null, GrayF32.class));

// Print out debugging information from the smoother

//Objects.requireNonNull(multiBaseline.getDisparitySmoother()).setVerbose(System.out,null);

// Creates a list where you can switch between different images/visualizations

var listDisplay = new ListDisplayPanel();

listDisplay.setPreferredSize(new Dimension(1000, 300));

ShowImages.showWindow(listDisplay, "Intermediate Results", true);

// We will display intermediate results as they come in

multiBaseline.setListener(( leftView, rightView, rectLeft, rectRight,

disparity, parameters, rect ) -> {

// Visualize the rectified stereo pair. You can interact with this window and verify

// that the y-axis is aligned

var rectified = new RectifiedPairPanel(true);

rectified.setImages(ConvertBufferedImage.convertTo(rectLeft, null),

ConvertBufferedImage.convertTo(rectRight, null));

// Display the colorized disparity

BufferedImage colorized = VisualizeImageData.disparity(disparity, null, parameters.disparityRange, 0);

SwingUtilities.invokeLater(() -> {

listDisplay.addItem(rectified, "Rectified " + leftView + " " + rightView);

listDisplay.addImage(colorized, leftView + " " + rightView);

});

});

// Process the images and compute a single combined disparity image

if (!multiBaseline.process(example.scene, center.index, pairedViewIdxs, sbaIndexToImageID::get)) {

throw new RuntimeException("Failed to fuse stereo views");

}

// Extract the point cloud from the fused disparity image

GrayF32 fusedDisparity = multiBaseline.getFusedInvDepth();

BufferedImage colorizedDisp = VisualizeImageData.inverseDepth(fusedDisparity, null, -1f, 0);

ShowImages.showWindow(colorizedDisp, "Fused InverseDepth");

// Now compute the point cloud it represents and the color of each pixel.

// For the fused image, instead of being in rectified image coordinates it's in the original image coordinates

// this makes extracting color much easier.

var cloud = new DogArray<>(Point3D_F64::new);

var cloudRgb = new DogArray_I32(cloud.size);

// Load the center image in color

var colorImage = new InterleavedU8(1, 1, 3);

imageLookup.loadImage(center.pview.id, colorImage);

// Since the fused image is in the original (i.e. distorted) pixel coordinates and is not rectified,

// that needs to be taken in account by undistorting the image to create the point cloud.

CameraPinholeBrown intrinsic = BundleAdjustmentOps.convert(example.scene.cameras.get(center.cameraIdx).model,

colorImage.width, colorImage.height, null);

Point2Transform2_F64 pixel_to_norm = new LensDistortionBrown(intrinsic).distort_F64(true, false);

MultiViewStereoOps.inverseToCloud(fusedDisparity, new PointToPixelTransform_F64(pixel_to_norm),

( pixX, pixY, x, y, z ) -> {

cloud.grow().setTo(x, y, z);

cloudRgb.add(colorImage.get24(pixX, pixY));

});

// Configure the point cloud viewer

PointCloudViewer pcv = VisualizeData.createPointCloudViewer();

pcv.setCameraHFov(UtilAngle.radian(70));

pcv.setTranslationStep(0.15);

pcv.addCloud(cloud.toList(), cloudRgb.data);

// pcv.setColorizer(new SingleAxisRgb.Z().fperiod(30.0));

JComponent viewer = pcv.getComponent();

viewer.setPreferredSize(new Dimension(600, 600));

ShowImages.showWindow(viewer, "Point Cloud", true);

System.out.println("Done");

}

}