Difference between revisions of "Example Point Cloud Depth Image"

From BoofCV

Jump to navigationJump to search (Added depth point cloud example) |

m |

||

| (12 intermediate revisions by the same user not shown) | |||

| Line 7: | Line 7: | ||

This example demonstrates how to create a 3D point cloud from a RGB-D sensor, such as the Kinect, and visualize it. RGB-D sensors have both visual and depth information. In this example the depth information is stored in a 16-bit image and the visual image in a standard color image. Calibration matching RGB an depth pixels to each other has already been done by the sensor. | This example demonstrates how to create a 3D point cloud from a RGB-D sensor, such as the Kinect, and visualize it. RGB-D sensors have both visual and depth information. In this example the depth information is stored in a 16-bit image and the visual image in a standard color image. Calibration matching RGB an depth pixels to each other has already been done by the sensor. | ||

Example Code: | Example Code: | ||

* [https://github.com/lessthanoptimal/BoofCV/blob/v0. | * [https://github.com/lessthanoptimal/BoofCV/blob/v0.40/examples/src/main/java/boofcv/examples/geometry/ExampleDepthPointCloud.java ExampleDepthPointCloud.java] | ||

Concepts: | Concepts: | ||

* RGB-D | * RGB-D | ||

* Point clouds | * Point clouds | ||

Related Examples: | Related Examples: | ||

| Line 26: | Line 22: | ||

<syntaxhighlight lang="java"> | <syntaxhighlight lang="java"> | ||

/** | /** | ||

* Example of how to create a point cloud from a RGB-D (Kinect) sensor. | * Example of how to create a point cloud from a RGB-D (Kinect) sensor. Data is loaded from two files, one for the | ||

* visual image and one for the depth image. | * visual image and one for the depth image. | ||

* | * | ||

| Line 32: | Line 28: | ||

*/ | */ | ||

public class ExampleDepthPointCloud { | public class ExampleDepthPointCloud { | ||

public static void main( String[] args ) { | |||

String nameRgb = UtilIO.pathExample("kinect/basket/basket_rgb.png"); | |||

String nameDepth = UtilIO.pathExample("kinect/basket/basket_depth.png"); | |||

String nameCalib = UtilIO.pathExample("kinect/basket/visualdepth.yaml"); | |||

VisualDepthParameters param = CalibrationIO.load(nameCalib); | |||

BufferedImage buffered = UtilImageIO.loadImageNotNull(nameRgb); | |||

Planar<GrayU8> rgb = ConvertBufferedImage.convertFromPlanar(buffered, null, true, GrayU8.class); | |||

GrayU16 depth = ConvertBufferedImage.convertFrom(UtilImageIO.loadImageNotNull(nameDepth), null, GrayU16.class); | |||

var cloud = new DogArray<>(Point3D_F64::new); | |||

var cloudColor = new DogArray<>(() -> new int[3]); | |||

VisualDepthOps.depthTo3D(param.visualParam, rgb, depth, cloud, cloudColor); | VisualDepthOps.depthTo3D(param.visualParam, rgb, depth, cloud, cloudColor); | ||

PointCloudViewer viewer = VisualizeData.createPointCloudViewer(); | |||

viewer.setCameraHFov(PerspectiveOps.computeHFov(param.visualParam)); | |||

viewer.setTranslationStep(15); | |||

viewer. | |||

for( int i = 0; i < cloud.size; i++ ) { | for (int i = 0; i < cloud.size; i++) { | ||

Point3D_F64 p = cloud.get(i); | Point3D_F64 p = cloud.get(i); | ||

int[] color = cloudColor.get(i); | int[] color = cloudColor.get(i); | ||

int c = (color[0] << 16 ) | (color[1] << 8) | color[2]; | int c = (color[0] << 16) | (color[1] << 8) | color[2]; | ||

viewer.addPoint(p.x,p.y,p.z,c); | viewer.addPoint(p.x, p.y, p.z, c); | ||

} | } | ||

viewer.getComponent().setPreferredSize(new Dimension(rgb.width, rgb.height)); | |||

// ---------- Display depth image | // ---------- Display depth image | ||

// use the actual max value in the image to maximize its appearance | // use the actual max value in the image to maximize its appearance | ||

int maxValue = ImageStatistics.max(depth); | int maxValue = ImageStatistics.max(depth); | ||

BufferedImage depthOut = VisualizeImageData.disparity(depth, null | BufferedImage depthOut = VisualizeImageData.disparity(depth, null, maxValue, 0); | ||

ShowImages.showWindow(depthOut,"Depth Image"); | ShowImages.showWindow(depthOut, "Depth Image", true); | ||

// ---------- Display colorized point cloud | // ---------- Display colorized point cloud | ||

ShowImages.showWindow(viewer,"Point Cloud"); | ShowImages.showWindow(viewer.getComponent(), "Point Cloud", true); | ||

System.out.println("Total points = "+cloud.size); | System.out.println("Total points = " + cloud.size); | ||

} | } | ||

} | } | ||

</syntaxhighlight> | </syntaxhighlight> | ||

Latest revision as of 15:54, 17 January 2022

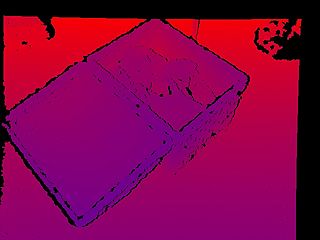

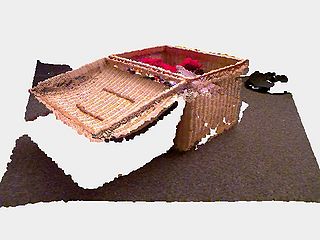

This example demonstrates how to create a 3D point cloud from a RGB-D sensor, such as the Kinect, and visualize it. RGB-D sensors have both visual and depth information. In this example the depth information is stored in a 16-bit image and the visual image in a standard color image. Calibration matching RGB an depth pixels to each other has already been done by the sensor.

Example Code:

Concepts:

- RGB-D

- Point clouds

Related Examples:

Example Code

/**

* Example of how to create a point cloud from a RGB-D (Kinect) sensor. Data is loaded from two files, one for the

* visual image and one for the depth image.

*

* @author Peter Abeles

*/

public class ExampleDepthPointCloud {

public static void main( String[] args ) {

String nameRgb = UtilIO.pathExample("kinect/basket/basket_rgb.png");

String nameDepth = UtilIO.pathExample("kinect/basket/basket_depth.png");

String nameCalib = UtilIO.pathExample("kinect/basket/visualdepth.yaml");

VisualDepthParameters param = CalibrationIO.load(nameCalib);

BufferedImage buffered = UtilImageIO.loadImageNotNull(nameRgb);

Planar<GrayU8> rgb = ConvertBufferedImage.convertFromPlanar(buffered, null, true, GrayU8.class);

GrayU16 depth = ConvertBufferedImage.convertFrom(UtilImageIO.loadImageNotNull(nameDepth), null, GrayU16.class);

var cloud = new DogArray<>(Point3D_F64::new);

var cloudColor = new DogArray<>(() -> new int[3]);

VisualDepthOps.depthTo3D(param.visualParam, rgb, depth, cloud, cloudColor);

PointCloudViewer viewer = VisualizeData.createPointCloudViewer();

viewer.setCameraHFov(PerspectiveOps.computeHFov(param.visualParam));

viewer.setTranslationStep(15);

for (int i = 0; i < cloud.size; i++) {

Point3D_F64 p = cloud.get(i);

int[] color = cloudColor.get(i);

int c = (color[0] << 16) | (color[1] << 8) | color[2];

viewer.addPoint(p.x, p.y, p.z, c);

}

viewer.getComponent().setPreferredSize(new Dimension(rgb.width, rgb.height));

// ---------- Display depth image

// use the actual max value in the image to maximize its appearance

int maxValue = ImageStatistics.max(depth);

BufferedImage depthOut = VisualizeImageData.disparity(depth, null, maxValue, 0);

ShowImages.showWindow(depthOut, "Depth Image", true);

// ---------- Display colorized point cloud

ShowImages.showWindow(viewer.getComponent(), "Point Cloud", true);

System.out.println("Total points = " + cloud.size);

}

}