Difference between revisions of "Example Stereo Visual Odometry"

From BoofCV

Jump to navigationJump to searchm |

m |

||

| (3 intermediate revisions by the same user not shown) | |||

| Line 7: | Line 7: | ||

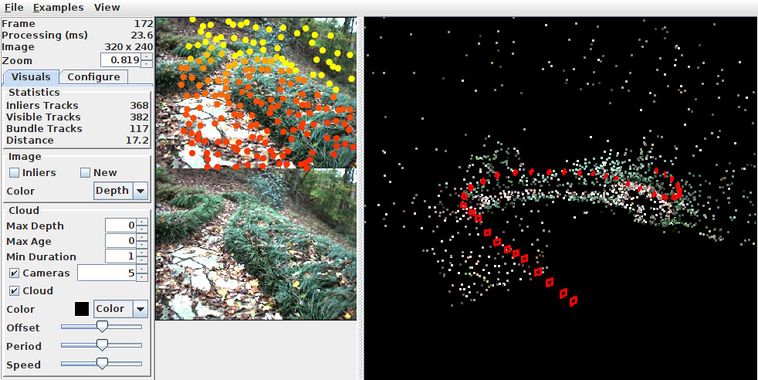

Stereo visual odometry estimates the camera's egomotion using a pair of calibrated cameras. Stereo camera systems are inherently more stable than monocular ones because the stereo pair provides good triangulation of image features and resolves the scale ambiguity. The example below shows how to use a high level interface with visual odometry algorithms. The basic high level interface hides internal data structures which are useful in many applications, which is why an optional interface is provided for accessing some of those structures. | Stereo visual odometry estimates the camera's egomotion using a pair of calibrated cameras. Stereo camera systems are inherently more stable than monocular ones because the stereo pair provides good triangulation of image features and resolves the scale ambiguity. The example below shows how to use a high level interface with visual odometry algorithms. The basic high level interface hides internal data structures which are useful in many applications, which is why an optional interface is provided for accessing some of those structures. | ||

Example File: [https://github.com/lessthanoptimal/BoofCV/blob/v0. | Example File: [https://github.com/lessthanoptimal/BoofCV/blob/v0.41/examples/src/main/java/boofcv/examples/sfm/ExampleVisualOdometryStereo.java ExampleVisualOdometryStereo.java] | ||

Concepts: | Concepts: | ||

| Line 33: | Line 33: | ||

*/ | */ | ||

public class ExampleVisualOdometryStereo { | public class ExampleVisualOdometryStereo { | ||

public static void main( String[] args ) { | |||

public static void main( String | |||

MediaManager media = DefaultMediaManager.INSTANCE; | MediaManager media = DefaultMediaManager.INSTANCE; | ||

| Line 41: | Line 39: | ||

// load camera description and the video sequence | // load camera description and the video sequence | ||

StereoParameters stereoParam = CalibrationIO.load(media.openFile(new File(directory , "stereo.yaml").getPath())); | StereoParameters stereoParam = CalibrationIO.load(media.openFile(new File(directory, "stereo.yaml").getPath())); | ||

SimpleImageSequence<GrayU8> video1 = media.openVideo( | SimpleImageSequence<GrayU8> video1 = media.openVideo( | ||

new File(directory , "left.mjpeg").getPath(), ImageType.single(GrayU8.class)); | new File(directory, "left.mjpeg").getPath(), ImageType.single(GrayU8.class)); | ||

SimpleImageSequence<GrayU8> video2 = media.openVideo( | SimpleImageSequence<GrayU8> video2 = media.openVideo( | ||

new File(directory,"right.mjpeg").getPath(), ImageType.single(GrayU8.class)); | new File(directory, "right.mjpeg").getPath(), ImageType.single(GrayU8.class)); | ||

var config = new ConfigStereoMonoTrackPnP(); | |||

// Specify which tracker and how it will behave | // Specify which tracker and how it will behave | ||

config.tracker.typeTracker = ConfigPointTracker.TrackerType.KLT; | |||

config.tracker.klt.pyramidLevels = ConfigDiscreteLevels.levels(4); | |||

config.tracker.klt.templateRadius = 4; | |||

config.tracker.klt.toleranceFB = 3; | |||

config.tracker.klt.pruneClose = true; | |||

config.tracker.detDesc.detectPoint.type = PointDetectorTypes.SHI_TOMASI; | |||

config.tracker.detDesc.detectPoint.shiTomasi.radius = 4; | |||

config.tracker.detDesc.detectPoint.general.maxFeatures = 300; | |||

config.tracker.detDesc.detectPoint.general.radius = 5; | |||

// We will estimate the location of features using block matching stereo | // We will estimate the location of features using block matching stereo | ||

config.disparity.errorType = DisparityError.CENSUS; | |||

config.disparity.disparityMin = 0; | |||

config.disparity.disparityRange = 50; | |||

config.disparity.regionRadiusX = 3; | |||

config.disparity.regionRadiusY = 3; | |||

config.disparity.maxPerPixelError = 30; | |||

config.disparity.texture = 0.05; | |||

config.disparity.validateRtoL = 1; | |||

config.disparity.subpixel = true; | |||

// Configurations related to how the structure is chained together frame to frame | // Configurations related to how the structure is chained together frame to frame | ||

config.scene.keyframes.geoMinCoverage = 0.4; | |||

config.scene.ransac.iterations = 200; | |||

config.scene.ransac.inlierThreshold = 1.0; | |||

// Declare each component then visual odometry | // Declare each component then visual odometry | ||

StereoVisualOdometry<GrayU8> visodom = FactoryVisualOdometry.stereoMonoPnP(config, GrayU8.class); | |||

StereoVisualOdometry<GrayU8> visodom = FactoryVisualOdometry.stereoMonoPnP( | |||

// Optionally dump verbose debugging information to stdout | // Optionally dump verbose debugging information to stdout | ||

// | // visodom.setVerbose(System.out, BoofMiscOps.hashSet(BoofVerbose.RUNTIME, VisualOdometry.VERBOSE_TRACKING)); | ||

// Pass in intrinsic/extrinsic calibration. | // Pass in intrinsic/extrinsic calibration. This can be changed in the future. | ||

visodom.setCalibration(stereoParam); | visodom.setCalibration(stereoParam); | ||

// Process the video sequence and output the location plus number of inliers | // Process the video sequence and output the location plus number of inliers | ||

long startTime = System.nanoTime(); | long startTime = System.nanoTime(); | ||

while( video1.hasNext() ) { | while (video1.hasNext()) { | ||

GrayU8 left = video1.next(); | GrayU8 left = video1.next(); | ||

GrayU8 right = video2.next(); | GrayU8 right = video2.next(); | ||

if( !visodom.process(left,right) ) { | if (!visodom.process(left, right)) { | ||

throw new RuntimeException("VO Failed!"); | throw new RuntimeException("VO Failed!"); | ||

} | } | ||

| Line 105: | Line 97: | ||

Vector3D_F64 T = leftToWorld.getT(); | Vector3D_F64 T = leftToWorld.getT(); | ||

System.out.printf("Location %8.2f %8.2f %8.2f | System.out.printf("Location %8.2f %8.2f %8.2f, %s\n", T.x, T.y, T.z, trackStats(visodom)); | ||

} | } | ||

System.out.printf("FPS %4.2f\n", video1.getFrameNumber()/((System.nanoTime()-startTime)*1e-9)); | System.out.printf("FPS %4.2f\n", video1.getFrameNumber()/((System.nanoTime() - startTime)*1e-9)); | ||

} | } | ||

| Line 113: | Line 105: | ||

* If the algorithm implements AccessPointTracks3D create a string which summarizing different tracking information | * If the algorithm implements AccessPointTracks3D create a string which summarizing different tracking information | ||

*/ | */ | ||

public static String trackStats( | public static String trackStats( VisualOdometry alg ) { | ||

if( !(alg instanceof AccessPointTracks3D)) | if (!(alg instanceof AccessPointTracks3D)) | ||

return ""; | return ""; | ||

var access = (AccessPointTracks3D)alg; | |||

int N = access.getTotalTracks(); | int N = access.getTotalTracks(); | ||

int totalInliers = 0; | |||

int totalNew = 0; | int totalNew = 0; | ||

for( int i = 0; i < N; i++ ) { | for (int i = 0; i < N; i++) { | ||

if( access.isTrackInlier(i) ) | if (access.isTrackInlier(i)) | ||

totalInliers++; | |||

if (access.isTrackNew(i)) | |||

totalNew++; | totalNew++; | ||

} | } | ||

return String.format("inlier %5.1f%% new %4d total %d", 100.0 * | return String.format("inlier: %5.1f%% new %4d total %d", 100.0*totalInliers/N, totalNew, N); | ||

} | } | ||

} | } | ||

</syntaxhighlight> | </syntaxhighlight> | ||

Latest revision as of 16:51, 2 September 2022

Stereo visual odometry estimates the camera's egomotion using a pair of calibrated cameras. Stereo camera systems are inherently more stable than monocular ones because the stereo pair provides good triangulation of image features and resolves the scale ambiguity. The example below shows how to use a high level interface with visual odometry algorithms. The basic high level interface hides internal data structures which are useful in many applications, which is why an optional interface is provided for accessing some of those structures.

Example File: ExampleVisualOdometryStereo.java

Concepts:

- Structure from Motion

- Geometric Vision

- Feature Tracking

Relevant Videos:

Related Tutorials/Example Code:

Example Code

/**

* Bare bones example showing how to estimate the camera's ego-motion using a stereo camera system. Additional

* information on the scene can be optionally extracted from the algorithm if it implements AccessPointTracks3D.

*

* @author Peter Abeles

*/

public class ExampleVisualOdometryStereo {

public static void main( String[] args ) {

MediaManager media = DefaultMediaManager.INSTANCE;

String directory = UtilIO.pathExample("vo/backyard/");

// load camera description and the video sequence

StereoParameters stereoParam = CalibrationIO.load(media.openFile(new File(directory, "stereo.yaml").getPath()));

SimpleImageSequence<GrayU8> video1 = media.openVideo(

new File(directory, "left.mjpeg").getPath(), ImageType.single(GrayU8.class));

SimpleImageSequence<GrayU8> video2 = media.openVideo(

new File(directory, "right.mjpeg").getPath(), ImageType.single(GrayU8.class));

var config = new ConfigStereoMonoTrackPnP();

// Specify which tracker and how it will behave

config.tracker.typeTracker = ConfigPointTracker.TrackerType.KLT;

config.tracker.klt.pyramidLevels = ConfigDiscreteLevels.levels(4);

config.tracker.klt.templateRadius = 4;

config.tracker.klt.toleranceFB = 3;

config.tracker.klt.pruneClose = true;

config.tracker.detDesc.detectPoint.type = PointDetectorTypes.SHI_TOMASI;

config.tracker.detDesc.detectPoint.shiTomasi.radius = 4;

config.tracker.detDesc.detectPoint.general.maxFeatures = 300;

config.tracker.detDesc.detectPoint.general.radius = 5;

// We will estimate the location of features using block matching stereo

config.disparity.errorType = DisparityError.CENSUS;

config.disparity.disparityMin = 0;

config.disparity.disparityRange = 50;

config.disparity.regionRadiusX = 3;

config.disparity.regionRadiusY = 3;

config.disparity.maxPerPixelError = 30;

config.disparity.texture = 0.05;

config.disparity.validateRtoL = 1;

config.disparity.subpixel = true;

// Configurations related to how the structure is chained together frame to frame

config.scene.keyframes.geoMinCoverage = 0.4;

config.scene.ransac.iterations = 200;

config.scene.ransac.inlierThreshold = 1.0;

// Declare each component then visual odometry

StereoVisualOdometry<GrayU8> visodom = FactoryVisualOdometry.stereoMonoPnP(config, GrayU8.class);

// Optionally dump verbose debugging information to stdout

// visodom.setVerbose(System.out, BoofMiscOps.hashSet(BoofVerbose.RUNTIME, VisualOdometry.VERBOSE_TRACKING));

// Pass in intrinsic/extrinsic calibration. This can be changed in the future.

visodom.setCalibration(stereoParam);

// Process the video sequence and output the location plus number of inliers

long startTime = System.nanoTime();

while (video1.hasNext()) {

GrayU8 left = video1.next();

GrayU8 right = video2.next();

if (!visodom.process(left, right)) {

throw new RuntimeException("VO Failed!");

}

Se3_F64 leftToWorld = visodom.getCameraToWorld();

Vector3D_F64 T = leftToWorld.getT();

System.out.printf("Location %8.2f %8.2f %8.2f, %s\n", T.x, T.y, T.z, trackStats(visodom));

}

System.out.printf("FPS %4.2f\n", video1.getFrameNumber()/((System.nanoTime() - startTime)*1e-9));

}

/**

* If the algorithm implements AccessPointTracks3D create a string which summarizing different tracking information

*/

public static String trackStats( VisualOdometry alg ) {

if (!(alg instanceof AccessPointTracks3D))

return "";

var access = (AccessPointTracks3D)alg;

int N = access.getTotalTracks();

int totalInliers = 0;

int totalNew = 0;

for (int i = 0; i < N; i++) {

if (access.isTrackInlier(i))

totalInliers++;

if (access.isTrackNew(i))

totalNew++;

}

return String.format("inlier: %5.1f%% new %4d total %d", 100.0*totalInliers/N, totalNew, N);

}

}