Difference between revisions of "Example Scene Classification"

From BoofCV

Jump to navigationJump to searchm |

m |

||

| Line 8: | Line 8: | ||

Example Code: | Example Code: | ||

* [https://github.com/lessthanoptimal/BoofCV/blob/v0. | * [https://github.com/lessthanoptimal/BoofCV/blob/v0.37/examples/src/main/java/boofcv/examples/recognition/ExampleClassifySceneKnn.java ExampleClassifySceneKnn.java ] | ||

Concepts: | Concepts: | ||

| Line 65: | Line 65: | ||

// Algorithms | // Algorithms | ||

ClusterVisualWords cluster; | ClusterVisualWords cluster; | ||

DescribeImageDense<GrayU8,TupleDesc_F64> describeImage; | DescribeImageDense<GrayU8, TupleDesc_F64> describeImage; | ||

NearestNeighbor<HistogramScene> nn; | NearestNeighbor<HistogramScene> nn; | ||

ClassifierKNearestNeighborsBow<GrayU8,TupleDesc_F64> classifier; | ClassifierKNearestNeighborsBow<GrayU8, TupleDesc_F64> classifier; | ||

public ExampleClassifySceneKnn(final DescribeImageDense<GrayU8, TupleDesc_F64> describeImage, | public ExampleClassifySceneKnn( final DescribeImageDense<GrayU8, TupleDesc_F64> describeImage, | ||

ComputeClusters<double[]> clusterer, | |||

NearestNeighbor<HistogramScene> nn ) { | |||

this.describeImage = describeImage; | this.describeImage = describeImage; | ||

this.cluster = new ClusterVisualWords(clusterer, describeImage.createDescription().size(),0xFEEDBEEF); | this.cluster = new ClusterVisualWords(clusterer, describeImage.createDescription().size(), 0xFEEDBEEF); | ||

this.nn = nn; | this.nn = nn; | ||

} | } | ||

| Line 86: | Line 86: | ||

// Either load pre-computed words or compute the words from the training images | // Either load pre-computed words or compute the words from the training images | ||

AssignCluster<double[]> assignment; | AssignCluster<double[]> assignment; | ||

if( new File(CLUSTER_FILE_NAME).exists() ) { | if (new File(CLUSTER_FILE_NAME).exists()) { | ||

assignment = UtilIO.load(CLUSTER_FILE_NAME); | assignment = UtilIO.load(CLUSTER_FILE_NAME); | ||

} else { | } else { | ||

| Line 94: | Line 94: | ||

// Use these clusters to assign features to words | // Use these clusters to assign features to words | ||

FeatureToWordHistogram_F64 featuresToHistogram = new FeatureToWordHistogram_F64(assignment,HISTOGRAM_HARD); | FeatureToWordHistogram_F64 featuresToHistogram = new FeatureToWordHistogram_F64(assignment, HISTOGRAM_HARD); | ||

// Storage for the work histogram in each image in the training set and their label | // Storage for the work histogram in each image in the training set and their label | ||

List<HistogramScene> memory; | List<HistogramScene> memory; | ||

if( !new File(HISTOGRAM_FILE_NAME).exists() ) { | if (!new File(HISTOGRAM_FILE_NAME).exists()) { | ||

System.out.println(" computing histograms"); | System.out.println(" computing histograms"); | ||

memory = computeHistograms(featuresToHistogram); | memory = computeHistograms(featuresToHistogram); | ||

UtilIO.save(memory,HISTOGRAM_FILE_NAME); | UtilIO.save(memory, HISTOGRAM_FILE_NAME); | ||

} | } | ||

} | } | ||

| Line 114: | Line 114: | ||

// computes features in the training image set | // computes features in the training image set | ||

List<TupleDesc_F64> features = new ArrayList<>(); | List<TupleDesc_F64> features = new ArrayList<>(); | ||

for( String scene : train.keySet() ) { | for (String scene : train.keySet()) { | ||

List<String> imagePaths = train.get(scene); | List<String> imagePaths = train.get(scene); | ||

System.out.println(" " + scene); | System.out.println(" " + scene); | ||

for( String path : imagePaths ) { | for (String path : imagePaths) { | ||

GrayU8 image = UtilImageIO.loadImage(path, GrayU8.class); | GrayU8 image = UtilImageIO.loadImage(path, GrayU8.class); | ||

describeImage.process(image); | describeImage.process(image); | ||

// the descriptions will get recycled on the next call, so create a copy | // the descriptions will get recycled on the next call, so create a copy | ||

for( TupleDesc_F64 d : describeImage.getDescriptions() ) { | for (TupleDesc_F64 d : describeImage.getDescriptions()) { | ||

features.add( d.copy() ); | features.add(d.copy()); | ||

} | } | ||

} | } | ||

| Line 147: | Line 147: | ||

AssignCluster<double[]> assignment = UtilIO.load(CLUSTER_FILE_NAME); | AssignCluster<double[]> assignment = UtilIO.load(CLUSTER_FILE_NAME); | ||

FeatureToWordHistogram_F64 featuresToHistogram = new FeatureToWordHistogram_F64(assignment,HISTOGRAM_HARD); | FeatureToWordHistogram_F64 featuresToHistogram = new FeatureToWordHistogram_F64(assignment, HISTOGRAM_HARD); | ||

| Line 162: | Line 162: | ||

* contains the word histogram and the scene that the histogram belongs to. | * contains the word histogram and the scene that the histogram belongs to. | ||

*/ | */ | ||

private List<HistogramScene> computeHistograms(FeatureToWordHistogram_F64 featuresToHistogram ) { | private List<HistogramScene> computeHistograms( FeatureToWordHistogram_F64 featuresToHistogram ) { | ||

List<String> scenes = getScenes(); | List<String> scenes = getScenes(); | ||

| Line 169: | Line 169: | ||

memory = new ArrayList<>(); | memory = new ArrayList<>(); | ||

for( int sceneIndex = 0; sceneIndex < scenes.size(); sceneIndex++ ) { | for (int sceneIndex = 0; sceneIndex < scenes.size(); sceneIndex++) { | ||

String scene = scenes.get(sceneIndex); | String scene = scenes.get(sceneIndex); | ||

System.out.println(" " + scene); | System.out.println(" " + scene); | ||

| Line 180: | Line 180: | ||

featuresToHistogram.reset(); | featuresToHistogram.reset(); | ||

describeImage.process(image); | describeImage.process(image); | ||

for ( TupleDesc_F64 d : describeImage.getDescriptions() ) { | for (TupleDesc_F64 d : describeImage.getDescriptions()) { | ||

featuresToHistogram.addFeature(d); | featuresToHistogram.addFeature(d); | ||

} | } | ||

| Line 201: | Line 201: | ||

@Override | @Override | ||

protected int classify(String path) { | protected int classify( String path ) { | ||

GrayU8 image = UtilImageIO.loadImage(path, GrayU8.class); | GrayU8 image = UtilImageIO.loadImage(path, GrayU8.class); | ||

| Line 207: | Line 207: | ||

} | } | ||

public static void main(String[] args) { | public static void main( String[] args ) { | ||

ConfigDenseSurfFast surfFast = new ConfigDenseSurfFast(new DenseSampling(8,8)); | ConfigDenseSurfFast surfFast = new ConfigDenseSurfFast(new DenseSampling(8, 8)); | ||

// ConfigDenseSurfStable surfStable = new ConfigDenseSurfStable(new DenseSampling(8,8)); | |||

// ConfigDenseSift sift = new ConfigDenseSift(new DenseSampling(6,6)); | |||

// ConfigDenseHoG hog = new ConfigDenseHoG(); | |||

DescribeImageDense<GrayU8,TupleDesc_F64> desc = | DescribeImageDense<GrayU8, TupleDesc_F64> desc = | ||

FactoryDescribeImageDense.surfFast(surfFast, GrayU8.class); | FactoryDescribeImageDense.surfFast(surfFast, GrayU8.class); | ||

// FactoryDescribeImageDense.surfStable(surfStable, GrayU8.class); | // FactoryDescribeImageDense.surfStable(surfStable, GrayU8.class); | ||

| Line 225: | Line 225: | ||

int pointDof = desc.createDescription().size(); | int pointDof = desc.createDescription().size(); | ||

NearestNeighbor<HistogramScene> nn = FactoryNearestNeighbor.exhaustive(new KdTreeHistogramScene_F64(pointDof)); | NearestNeighbor<HistogramScene> nn = FactoryNearestNeighbor.exhaustive(new KdTreeHistogramScene_F64(pointDof)); | ||

ExampleClassifySceneKnn example = new ExampleClassifySceneKnn(desc,clusterer,nn); | ExampleClassifySceneKnn example = new ExampleClassifySceneKnn(desc, clusterer, nn); | ||

File trainingDir = new File(UtilIO.pathExample("learning/scene/train")); | File trainingDir = new File(UtilIO.pathExample("learning/scene/train")); | ||

File testingDir = new File(UtilIO.pathExample("learning/scene/test")); | File testingDir = new File(UtilIO.pathExample("learning/scene/test")); | ||

if( !trainingDir.exists() || !testingDir.exists() ) { | if (!trainingDir.exists() || !testingDir.exists()) { | ||

String addressSrc = "http://boofcv.org/notwiki/largefiles/bow_data_v001.zip"; | String addressSrc = "http://boofcv.org/notwiki/largefiles/bow_data_v001.zip"; | ||

File dst = new File(trainingDir.getParentFile(),"bow_data_v001.zip"); | File dst = new File(trainingDir.getParentFile(), "bow_data_v001.zip"); | ||

try { | try { | ||

DeepBoofDataBaseOps.download(addressSrc, dst); | DeepBoofDataBaseOps.download(addressSrc, dst); | ||

DeepBoofDataBaseOps.decompressZip(dst, dst.getParentFile(), true); | DeepBoofDataBaseOps.decompressZip(dst, dst.getParentFile(), true); | ||

System.out.println("Download complete!"); | System.out.println("Download complete!"); | ||

} catch( IOException e ) { | } catch (IOException e) { | ||

throw new | throw new UncheckedIOException(e); | ||

} | } | ||

} else { | } else { | ||

System.out.println("Delete and download again if there are file not found errors"); | System.out.println("Delete and download again if there are file not found errors"); | ||

System.out.println(" "+trainingDir); | System.out.println(" " + trainingDir); | ||

System.out.println(" "+testingDir); | System.out.println(" " + testingDir); | ||

} | } | ||

| Line 260: | Line 260: | ||

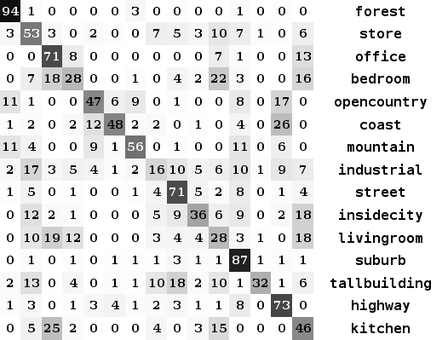

// Not the best coloration scheme... perfect = red diagonal and blue elsewhere. | // Not the best coloration scheme... perfect = red diagonal and blue elsewhere. | ||

ShowImages.showWindow(new ConfusionMatrixPanel( | ShowImages.showWindow(new ConfusionMatrixPanel( | ||

confusion.getMatrix(),example.getScenes(), 400, true), "Confusion Matrix", true); | confusion.getMatrix(), example.getScenes(), 400, true), "Confusion Matrix", true); | ||

// For SIFT descriptor the accuracy is 54.0% | // For SIFT descriptor the accuracy is 54.0% | ||

Revision as of 18:32, 21 December 2020

Scene classification is the problem where you are presented with an image and you need to classify it as belonging to a known set. The example below demonstrates how to perform scene classification using dense SURF features and a nearest neighbour classifier. See code documentation for a more detailed discussion.

Example Code:

Concepts:

- Scene Classification

- Dense Image Features

- Clustering

- k-NN classifier

Related Examples:

Example Code

/**

* <p>

* Example of how to train a K-NN bow-of-word classifier for scene recognition. The resulting classifier

* produces results which are correct 52.2% of the time. To provide a point of comparison, randomly selecting

* a scene is about 6.7% accurate, SVM One vs One RBF classifier can produce accuracy of around 74% and

* other people using different techniques claim to have achieved around 85% accurate with more advanced

* techniques.

* </p>

*

* Training Steps:

* <ol>

* <li>Compute dense SURF features across the training data set.</li>

* <li>Cluster using k-means to create works.</li>

* <li>For each image compute the histogram of words found in the image</li>

* <li>Save word histograms and image scene labels in a classifier</li>

* </ol>

*

* Testing Steps:

* <ol>

* <li>For each image in the testing data set compute its histogram</li>

* <li>Look up the k-nearest-neighbors for that histogram</li>

* <li>Classify an image by by selecting the scene type with the most neighbors</li>

* </ol>

*

* <p>NOTE: Scene recognition is still very much a work in progress in BoofCV and the code is likely to be

* significantly modified in the future.</p>

*

* @author Peter Abeles

*/

public class ExampleClassifySceneKnn extends LearnSceneFromFiles {

// Tuning parameters

public static int NUMBER_OF_WORDS = 100;

public static boolean HISTOGRAM_HARD = true;

public static int NUM_NEIGHBORS = 10;

public static int MAX_KNN_ITERATIONS = 100;

// Files intermediate results are stored in

public static final String CLUSTER_FILE_NAME = "clusters.obj";

public static final String HISTOGRAM_FILE_NAME = "histograms.obj";

// Algorithms

ClusterVisualWords cluster;

DescribeImageDense<GrayU8, TupleDesc_F64> describeImage;

NearestNeighbor<HistogramScene> nn;

ClassifierKNearestNeighborsBow<GrayU8, TupleDesc_F64> classifier;

public ExampleClassifySceneKnn( final DescribeImageDense<GrayU8, TupleDesc_F64> describeImage,

ComputeClusters<double[]> clusterer,

NearestNeighbor<HistogramScene> nn ) {

this.describeImage = describeImage;

this.cluster = new ClusterVisualWords(clusterer, describeImage.createDescription().size(), 0xFEEDBEEF);

this.nn = nn;

}

/**

* Process all the data in the training data set to learn the classifications. See code for details.

*/

public void learnAndSave() {

System.out.println("======== Learning Classifier");

// Either load pre-computed words or compute the words from the training images

AssignCluster<double[]> assignment;

if (new File(CLUSTER_FILE_NAME).exists()) {

assignment = UtilIO.load(CLUSTER_FILE_NAME);

} else {

System.out.println(" Computing clusters");

assignment = computeClusters();

}

// Use these clusters to assign features to words

FeatureToWordHistogram_F64 featuresToHistogram = new FeatureToWordHistogram_F64(assignment, HISTOGRAM_HARD);

// Storage for the work histogram in each image in the training set and their label

List<HistogramScene> memory;

if (!new File(HISTOGRAM_FILE_NAME).exists()) {

System.out.println(" computing histograms");

memory = computeHistograms(featuresToHistogram);

UtilIO.save(memory, HISTOGRAM_FILE_NAME);

}

}

/**

* Extract dense features across the training set. Then clusters are found within those features.

*/

private AssignCluster<double[]> computeClusters() {

System.out.println("Image Features");

// computes features in the training image set

List<TupleDesc_F64> features = new ArrayList<>();

for (String scene : train.keySet()) {

List<String> imagePaths = train.get(scene);

System.out.println(" " + scene);

for (String path : imagePaths) {

GrayU8 image = UtilImageIO.loadImage(path, GrayU8.class);

describeImage.process(image);

// the descriptions will get recycled on the next call, so create a copy

for (TupleDesc_F64 d : describeImage.getDescriptions()) {

features.add(d.copy());

}

}

}

// add the features to the overall list which the clusters will be found inside of

for (int i = 0; i < features.size(); i++) {

cluster.addReference(features.get(i));

}

System.out.println("Clustering");

// Find the clusters. This can take a bit

cluster.process(NUMBER_OF_WORDS);

UtilIO.save(cluster.getAssignment(), CLUSTER_FILE_NAME);

return cluster.getAssignment();

}

public void loadAndCreateClassifier() {

// load results from a file

List<HistogramScene> memory = UtilIO.load(HISTOGRAM_FILE_NAME);

AssignCluster<double[]> assignment = UtilIO.load(CLUSTER_FILE_NAME);

FeatureToWordHistogram_F64 featuresToHistogram = new FeatureToWordHistogram_F64(assignment, HISTOGRAM_HARD);

// Provide the training results to K-NN and it will preprocess these results for quick lookup later on

// Can use this classifier with saved results and avoid the

classifier = new ClassifierKNearestNeighborsBow<>(nn, describeImage, featuresToHistogram);

classifier.setClassificationData(memory, getScenes().size());

classifier.setNumNeighbors(NUM_NEIGHBORS);

}

/**

* For all the images in the training data set it computes a {@link HistogramScene}. That data structure

* contains the word histogram and the scene that the histogram belongs to.

*/

private List<HistogramScene> computeHistograms( FeatureToWordHistogram_F64 featuresToHistogram ) {

List<String> scenes = getScenes();

List<HistogramScene> memory;// Processed results which will be passed into the k-NN algorithm

memory = new ArrayList<>();

for (int sceneIndex = 0; sceneIndex < scenes.size(); sceneIndex++) {

String scene = scenes.get(sceneIndex);

System.out.println(" " + scene);

List<String> imagePaths = train.get(scene);

for (String path : imagePaths) {

GrayU8 image = UtilImageIO.loadImage(path, GrayU8.class);

// reset before processing a new image

featuresToHistogram.reset();

describeImage.process(image);

for (TupleDesc_F64 d : describeImage.getDescriptions()) {

featuresToHistogram.addFeature(d);

}

featuresToHistogram.process();

// The histogram is already normalized so that it sums up to 1. This provides invariance

// against the overall number of features changing.

double[] histogram = featuresToHistogram.getHistogram();

// Create the data structure used by the KNN classifier

HistogramScene imageHist = new HistogramScene(NUMBER_OF_WORDS);

imageHist.setHistogram(histogram);

imageHist.type = sceneIndex;

memory.add(imageHist);

}

}

return memory;

}

@Override

protected int classify( String path ) {

GrayU8 image = UtilImageIO.loadImage(path, GrayU8.class);

return classifier.classify(image);

}

public static void main( String[] args ) {

ConfigDenseSurfFast surfFast = new ConfigDenseSurfFast(new DenseSampling(8, 8));

// ConfigDenseSurfStable surfStable = new ConfigDenseSurfStable(new DenseSampling(8,8));

// ConfigDenseSift sift = new ConfigDenseSift(new DenseSampling(6,6));

// ConfigDenseHoG hog = new ConfigDenseHoG();

DescribeImageDense<GrayU8, TupleDesc_F64> desc =

FactoryDescribeImageDense.surfFast(surfFast, GrayU8.class);

// FactoryDescribeImageDense.surfStable(surfStable, GrayU8.class);

// FactoryDescribeImageDense.sift(sift, GrayU8.class);

// FactoryDescribeImageDense.hog(hog, ImageType.single(GrayU8.class));

ComputeClusters<double[]> clusterer = FactoryClustering.kMeans_F64(null, MAX_KNN_ITERATIONS, 20, 1e-6);

clusterer.setVerbose(true);

int pointDof = desc.createDescription().size();

NearestNeighbor<HistogramScene> nn = FactoryNearestNeighbor.exhaustive(new KdTreeHistogramScene_F64(pointDof));

ExampleClassifySceneKnn example = new ExampleClassifySceneKnn(desc, clusterer, nn);

File trainingDir = new File(UtilIO.pathExample("learning/scene/train"));

File testingDir = new File(UtilIO.pathExample("learning/scene/test"));

if (!trainingDir.exists() || !testingDir.exists()) {

String addressSrc = "http://boofcv.org/notwiki/largefiles/bow_data_v001.zip";

File dst = new File(trainingDir.getParentFile(), "bow_data_v001.zip");

try {

DeepBoofDataBaseOps.download(addressSrc, dst);

DeepBoofDataBaseOps.decompressZip(dst, dst.getParentFile(), true);

System.out.println("Download complete!");

} catch (IOException e) {

throw new UncheckedIOException(e);

}

} else {

System.out.println("Delete and download again if there are file not found errors");

System.out.println(" " + trainingDir);

System.out.println(" " + testingDir);

}

example.loadSets(trainingDir, null, testingDir);

// train the classifier

example.learnAndSave();

// now load it for evaluation purposes from the files

example.loadAndCreateClassifier();

// test the classifier on the test set

Confusion confusion = example.evaluateTest();

confusion.getMatrix().print();

System.out.println("Accuracy = " + confusion.computeAccuracy());

// Show confusion matrix

// Not the best coloration scheme... perfect = red diagonal and blue elsewhere.

ShowImages.showWindow(new ConfusionMatrixPanel(

confusion.getMatrix(), example.getScenes(), 400, true), "Confusion Matrix", true);

// For SIFT descriptor the accuracy is 54.0%

// For "fast" SURF descriptor the accuracy is 52.2%

// For "stable" SURF descriptor the accuracy is 49.4%

// For HOG 53.3%

// SURF results are interesting. "Stable" is significantly better than "fast"!

// One explanation is that the descriptor for "fast" samples a smaller region than "stable", by a

// couple of pixels at scale of 1. Thus there is less overlap between the features.

// Reducing the size of "stable" to 0.95 does slightly improve performance to 50.5%, can't scale it down

// much more without performance going down

}

}