Tutorial Kinect

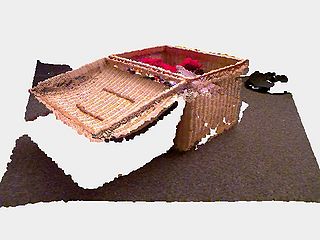

The Kinect is a popular RGB-D sensor that provides both video and depth information. BoofCV supports the OpenKinect driver directly through several helper functions, and the OpenKinect project already provides a Java interface. The Kinect is much easier to work with than stereo cameras and provides similar information. Its major downsides are that it can't be used outdoors and that it has a more limited range.

To access the Kinect helper functions in BoofCV, go to the boofcv/integration/openkinect directory. Several useful examples and utilities are provided in the openkinect/examples directory, while the main source code is in the openkinect/src directory. To use these functions, be sure to include BoofCV_OpenKinect.jar in your project. It can be downloaded precompiled, or you can compile it yourself using ant.

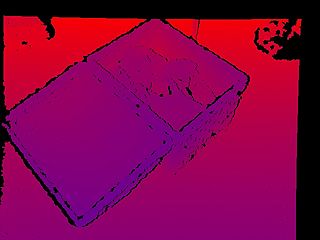

Two classes are provided in the Kinect jar: StreamOpenKinectRgbDepth and UtilOpenKinect. StreamOpenKinectRgbDepth is a high-level interface for streaming data from a Kinect using OpenKinect. UtilOpenKinect contains functions for manipulating, reading, and saving Kinect data. The depth image is stored as an unsigned 16-bit gray scale image (GrayU16) and the RGB image in any standard image format.
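To make the unsigned 16-bit depth representation concrete, here is a minimal sketch in plain Java of decoding a raw depth frame into unsigned values. It is not BoofCV's implementation: `DepthFrameSketch` and `decodeU16` are hypothetical names, and the sketch assumes the frame holds little-endian 16-bit samples in row-major order. In practice you would use the functions in UtilOpenKinect instead.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical helper, not part of BoofCV: decodes a raw 16-bit depth frame. */
final class DepthFrameSketch {

    /**
     * Reads width*height unsigned 16-bit samples from the buffer.
     * Assumption: samples are little-endian and stored row by row.
     */
    static int[] decodeU16(ByteBuffer frame, int width, int height) {
        // Work on a duplicate so the caller's position and byte order are untouched
        ByteBuffer view = frame.duplicate().order(ByteOrder.LITTLE_ENDIAN);
        int[] depth = new int[width * height];
        for (int i = 0; i < depth.length; i++) {
            // Java shorts are signed, so mask to recover the unsigned value
            depth[i] = view.getShort(i * 2) & 0xFFFF;
        }
        return depth;
    }

    public static void main(String[] args) {
        // Two synthetic samples: 0x03E8 (1000) and 0xFFFF (65535)
        ByteBuffer frame = ByteBuffer.allocate(4);
        frame.put(new byte[]{(byte) 0xE8, 0x03, (byte) 0xFF, (byte) 0xFF});
        int[] depth = decodeU16(frame, 2, 1);
        System.out.println(depth[0] + " " + depth[1]); // prints "1000 65535"
    }
}
```

The mask with `0xFFFF` is the important step: without it, any sample at or above 32768 would come out negative.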

Usage Example

Many more examples are provided in the openkinect/examples directory. Here is one showing typical usage.

    // Package locations below match recent BoofCV releases and may differ in older versions.
    import boofcv.gui.image.ImagePanel;
    import boofcv.gui.image.ShowImages;
    import boofcv.gui.image.VisualizeImageData;
    import boofcv.io.image.ConvertBufferedImage;
    import boofcv.openkinect.UtilOpenKinect;
    import boofcv.struct.image.GrayU16;
    import boofcv.struct.image.GrayU8;
    import boofcv.struct.image.Planar;
    import com.sun.jna.NativeLibrary;
    import org.openkinect.freenect.*;

    import java.awt.image.BufferedImage;
    import java.nio.ByteBuffer;

    /**
     * Example demonstrating how to process and display data from the Kinect.
     *
     * @author Peter Abeles
     */
    public class OpenKinectStreamingTest {

        {
            // be sure to set OpenKinectExampleParam.PATH_TO_SHARED_LIBRARY to the location of your shared library!
            NativeLibrary.addSearchPath("freenect", OpenKinectExampleParam.PATH_TO_SHARED_LIBRARY);
        }

        // Images are created with a placeholder size and reshaped when the first frame arrives
        Planar<GrayU8> rgb = new Planar<GrayU8>(GrayU8.class,1,1,3);
        GrayU16 depth = new GrayU16(1,1);

        BufferedImage outRgb;
        ImagePanel guiRgb;

        BufferedImage outDepth;
        ImagePanel guiDepth;

        public void process() {
            Context kinect = Freenect.createContext();

            // fail if no device is connected
            if( kinect.numDevices() <= 0 )
                throw new RuntimeException("No kinect found!");

            Device device = kinect.openDevice(0);

            device.setDepthFormat(DepthFormat.REGISTERED);
            device.setVideoFormat(VideoFormat.RGB);

            // frames are delivered through these callbacks
            device.startDepth(new DepthHandler() {
                @Override
                public void onFrameReceived(FrameMode mode, ByteBuffer frame, int timestamp) {
                    processDepth(mode,frame,timestamp);
                }
            });
            device.startVideo(new VideoHandler() {
                @Override
                public void onFrameReceived(FrameMode mode, ByteBuffer frame, int timestamp) {
                    processRgb(mode,frame,timestamp);
                }
            });

            // keep streaming for 100 seconds before shutting down
            long startTime = System.currentTimeMillis();
            while( startTime+100000 > System.currentTimeMillis() ) {}
            System.out.println("100 Seconds elapsed");

            device.stopDepth();
            device.stopVideo();
            device.close();
        }

        protected void processDepth( FrameMode mode, ByteBuffer frame, int timestamp ) {
            System.out.println("Got depth! "+timestamp);

            // on the first frame, size the images and open the display window
            if( outDepth == null ) {
                depth.reshape(mode.getWidth(),mode.getHeight());
                outDepth = new BufferedImage(depth.width,depth.height,BufferedImage.TYPE_INT_RGB);
                guiDepth = ShowImages.showWindow(outDepth,"Depth Image");
            }

            UtilOpenKinect.bufferDepthToU16(frame, depth);

            // VisualizeImageData.grayUnsigned(depth,outDepth,UtilOpenKinect.FREENECT_DEPTH_MM_MAX_VALUE);
            VisualizeImageData.disparity(depth, outDepth, 0, UtilOpenKinect.FREENECT_DEPTH_MM_MAX_VALUE,0);
            guiDepth.repaint();
        }

        protected void processRgb( FrameMode mode, ByteBuffer frame, int timestamp ) {
            if( mode.getVideoFormat() != VideoFormat.RGB ) {
                System.out.println("Bad rgb format!");
            }

            System.out.println("Got rgb! "+timestamp);

            // on the first frame, size the images and open the display window
            if( outRgb == null ) {
                rgb.reshape(mode.getWidth(),mode.getHeight());
                outRgb = new BufferedImage(rgb.width,rgb.height,BufferedImage.TYPE_INT_RGB);
                guiRgb = ShowImages.showWindow(outRgb,"RGB Image");
            }

            UtilOpenKinect.bufferRgbToMsU8(frame, rgb);
            ConvertBufferedImage.convertTo_U8(rgb,outRgb,true);

            guiRgb.repaint();
        }

        public static void main( String[] args ) {
            OpenKinectStreamingTest app = new OpenKinectStreamingTest();
            app.process();
        }
    }
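
The example above hands visualization of the depth image to VisualizeImageData. To illustrate the general idea of rendering depth against a maximum value, here is a minimal sketch in plain Java that scales depth linearly to gray levels. It does not reproduce BoofCV's rendering; `DepthToGraySketch`, `toGray`, and `render` are hypothetical names, and the maximum of 10000 used in `main` is only an example value.

```java
import java.awt.image.BufferedImage;

/** Hypothetical helper, not part of BoofCV: renders depth values as gray levels. */
final class DepthToGraySketch {

    /** Linearly maps depth in [0, maxValue] to 0..255; out-of-range values are clamped. */
    static int toGray(int depth, int maxValue) {
        int clamped = Math.min(Math.max(depth, 0), maxValue);
        return (clamped * 255) / maxValue;
    }

    /** Builds an RGB image whose pixels are the gray level of each depth sample. */
    static BufferedImage render(int[] depth, int width, int height, int maxValue) {
        BufferedImage out = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int g = toGray(depth[y * width + x], maxValue);
                // same value in the red, green, and blue channels gives gray
                out.setRGB(x, y, (g << 16) | (g << 8) | g);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        int[] depth = {0, 5000, 10000, 20000}; // synthetic 2x2 depth image
        BufferedImage image = render(depth, 2, 2, 10000); // 10000 is an example maximum
        System.out.println(image.getRGB(1, 0) & 0xFF); // prints 127
    }
}
```

Clamping matters because samples beyond the chosen maximum would otherwise overflow the 0..255 range and wrap into misleading colors.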