Tutorial Camera Calibration

Figure: Different types of supported planar calibration grids: chessboard pattern and square grid pattern.

Camera calibration is the process of estimating intrinsic and/or extrinsic parameters. Intrinsic parameters describe the camera's internal characteristics, such as its focal length, skew, distortion, and image center. Extrinsic parameters describe its position and orientation in the world. Knowing the intrinsic parameters is an essential first step for 3D computer vision, as it allows you to estimate the scene's structure in Euclidean space and to remove lens distortion, which degrades accuracy.
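
To make the intrinsic parameters concrete, here is a minimal sketch of how they map a 3D point in the camera frame to a pixel. It assumes a pinhole model with two radial distortion terms applied in normalized coordinates, as in Zhang's paper. The class and field names are illustrative only and are not BoofCV's API.

```java
/** Illustrative pinhole camera with two radial distortion terms; not BoofCV's API. */
final class PinholeSketch {
    final double fx, fy;   // focal lengths in pixels
    final double skew;     // skew between the pixel axes, usually close to zero
    final double cx, cy;   // image center in pixels
    final double k1, k2;   // radial distortion coefficients

    PinholeSketch(double fx, double fy, double skew, double cx, double cy,
                  double k1, double k2) {
        this.fx = fx; this.fy = fy; this.skew = skew;
        this.cx = cx; this.cy = cy; this.k1 = k1; this.k2 = k2;
    }

    /** Projects a point in the camera frame (z > 0) to distorted pixel coordinates. */
    double[] project(double x, double y, double z) {
        if (z <= 0) throw new IllegalArgumentException("point must be in front of the camera");
        // Normalized image coordinates
        double nx = x / z, ny = y / z;
        // Radial distortion scales the point according to its distance from the center
        double r2 = nx * nx + ny * ny;
        double scale = 1.0 + k1 * r2 + k2 * r2 * r2;
        double dx = nx * scale, dy = ny * scale;
        // Apply the intrinsic matrix K = [fx skew cx; 0 fy cy; 0 0 1]
        return new double[]{fx * dx + skew * dy + cx, fy * dy + cy};
    }

    public static void main(String[] args) {
        PinholeSketch cam = new PinholeSketch(500, 500, 0, 320, 240, -0.1, 0.0);
        double[] pixel = cam.project(0.2, -0.1, 1.0);
        System.out.printf("pixel = (%.2f, %.2f)%n", pixel[0], pixel[1]);
    }
}
```

The extrinsic parameters would enter one step earlier, as the rotation and translation that move a world point into the camera frame before `project` is called.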

BoofCV provides fully automated calibration from planar targets with square and checkered patterns that can be easily printed. It is also possible to use 3D calibration targets or other types of calibration grids, provided that the user writes code for detecting the calibration points. This tutorial only discusses the fully automated calibration procedure for planar targets.

Calibration in BoofCV is heavily influenced by Zhengyou Zhang's 1999 paper, "Flexible Camera Calibration By Viewing a Plane From Unknown Orientations". See his webpage in the references below for the paper and for more technical and theoretical information on camera calibration. A link is also provided to a popular MATLAB calibration toolbox.

It is possible to either manually collect images and process them or to use a fully automated assisted calibration. Both approaches are described below.

References:

- Zhang's Camera Calibration

- Caltech's MATLAB Calibration Toolbox

- R. Hartley and A. Zisserman, "Multiple View Geometry in Computer Vision"

Quick Links

Example Code

- Calibrate Monocular Camera

- Calibrate Stereo Camera

- Remove Lens Distortion

- Rectify Calibrated Stereo

- Rectify Uncalibrated Stereo

The Calibration Process

In this section, the camera calibration procedure is broken down into steps and explained. Almost identical steps are followed for calibrating a single camera or a stereo camera system. First, a quick overview:

- Select a pattern, download, and print

- Mount the pattern onto a rigid flat surface

- Take many pictures of the target at different orientations and distances

- Download the pictures to a computer and select the ones that are in focus

- Use the provided examples to automatically detect the calibration target and compute parameters

- Move calibration file to a safe location

Which calibration target you use is a matter of personal preference. Chessboard patterns tend to produce slightly more accurate results.

For printable calibration documents, see the "Calibration Targets" page. When using the example code or the assisted calibration application, make sure you correctly describe the target type that you are looking for.
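
Describing the target correctly matters because the calibration needs the known location of every calibration point on the printed plane. The sketch below shows one way to lay out those points for a chessboard. It assumes that the grid is specified as a number of squares, that the calibration points are the interior corners, and that the origin is at one outer corner of the board. These conventions and the class name are assumptions for illustration, not BoofCV's definitions.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative layout of chessboard calibration points on the target plane (z = 0). */
final class ChessboardLayoutSketch {

    /**
     * Returns interior corner locations in row-major order.
     * Units are whatever squareWidth is measured in, for example millimeters.
     */
    static List<double[]> interiorCorners(int squareRows, int squareCols, double squareWidth) {
        if (squareRows < 2 || squareCols < 2) {
            throw new IllegalArgumentException("need at least 2 squares along each axis");
        }
        List<double[]> points = new ArrayList<>();
        // Interior corners are where four squares meet, so there is one fewer per axis
        for (int row = 1; row < squareRows; row++) {
            for (int col = 1; col < squareCols; col++) {
                points.add(new double[]{col * squareWidth, row * squareWidth});
            }
        }
        return points;
    }

    public static void main(String[] args) {
        List<double[]> points = interiorCorners(7, 5, 30.0);
        System.out.println("number of calibration points: " + points.size());
    }
}
```

If the square count or the square width does not match the printed target, the detected image points are paired with the wrong plane coordinates and the estimated parameters will be wrong.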

The target needs to be mounted on a flat surface, and any warping will decrease calibration accuracy. An ideal surface is rigid and smooth. Thick foam poster board is easily obtainable and works well. I've also used clipboards with some minor modifications. Cardboard is OK if high precision isn't required, but it will warp over time.

General Advice:

- If possible, turn off your camera's autofocus.

- Avoid strong lighting, which can make detection difficult and less accurate.

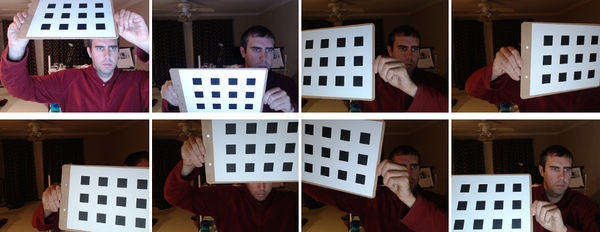

Calibration Target Placement

When collecting calibration images, it is best to take a diverse set of in-focus images that cover the entire image, especially the image border. An example of how to do this is shown in the figure above. One problem when calibrating a camera is that the residual error can't be trusted as a way to verify correctness. For example, if all the pictures are taken in one region, the results will be biased even if the residual error is low. Extreme angles or changes in distance should also be avoided.
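
Since the residual error does not reveal poor coverage, it can help to measure coverage directly. The following is a minimal sketch that divides the image into a coarse grid and reports which cells contain at least one detected calibration point across all images. The grid size and the class and method names are assumptions chosen for illustration.

```java
/** Illustrative check of how well detected calibration points cover the image. */
final class CoverageSketch {

    /** Marks each cell of a gridRows x gridCols partition that contains a point. */
    static boolean[][] coveredCells(double[][] pixels, int width, int height,
                                    int gridCols, int gridRows) {
        boolean[][] covered = new boolean[gridRows][gridCols];
        for (double[] p : pixels) {
            // Ignore points that fall outside the image
            if (p[0] < 0 || p[1] < 0 || p[0] >= width || p[1] >= height) continue;
            int col = Math.min(gridCols - 1, (int) (p[0] * gridCols / width));
            int row = Math.min(gridRows - 1, (int) (p[1] * gridRows / height));
            covered[row][col] = true;
        }
        return covered;
    }

    /** Fraction of cells that contain at least one point. */
    static double coverageFraction(boolean[][] covered) {
        int hit = 0, total = 0;
        for (boolean[] row : covered) {
            for (boolean cell : row) {
                total++;
                if (cell) hit++;
            }
        }
        return total == 0 ? 0.0 : (double) hit / total;
    }

    public static void main(String[] args) {
        double[][] detections = {{10, 10}, {630, 470}, {320, 240}};
        boolean[][] covered = coveredCells(detections, 640, 480, 4, 4);
        System.out.println("coverage fraction: " + coverageFraction(covered));
    }
}
```

Empty cells along the outer rows and columns are the ones to watch, since that is where lens distortion is strongest.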

A good way to check whether calibration was done correctly is to see if straight edges are straight. In an undistorted image, try moving a ruler to the image border and see if it appears warped. For stereo images, you can check whether rectification is correct by clicking on an easily recognizable feature and seeing if it is at the same y-coordinate in the other image.
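
The straight-edge check can also be done numerically. As a rough sketch, assume you have sampled a few pixel locations along an edge that is straight in the real world, such as the ruler. The largest distance of any sample from the line through the two end samples then indicates how much warping remains. The class and method names are hypothetical.

```java
/** Illustrative straightness measure for points sampled along a physical straight edge. */
final class StraightnessSketch {

    /** Largest perpendicular distance, in pixels, from the line through the end points. */
    static double maxDeviation(double[][] points) {
        if (points.length < 2) throw new IllegalArgumentException("need at least 2 points");
        double[] a = points[0];
        double[] b = points[points.length - 1];
        double dx = b[0] - a[0], dy = b[1] - a[1];
        double length = Math.hypot(dx, dy);
        if (length == 0) throw new IllegalArgumentException("end points must differ");
        double max = 0;
        for (double[] p : points) {
            // Cross product magnitude divided by the chord length is the point-to-line distance
            double distance = Math.abs(dx * (p[1] - a[1]) - dy * (p[0] - a[0])) / length;
            max = Math.max(max, distance);
        }
        return max;
    }

    public static void main(String[] args) {
        double[][] edge = {{0, 0}, {5, 1}, {10, 0}};
        System.out.println("max deviation in pixels: " + maxDeviation(edge));
    }
}
```

A value near zero close to the image border suggests that distortion was modeled well there. What counts as small enough depends on the image resolution and the application.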

Assisted Calibration Application

A calibration application is provided with BoofCV. You can find it in the "boofcv/applications" directory. Here's how you build and run it:

cd boofcv/applications

gradle applicationsJar

java -jar applications.jar CameraCalibration

That will print out instructions. The application accepts two kinds of input: images from a directory or a video feed from a webcam.

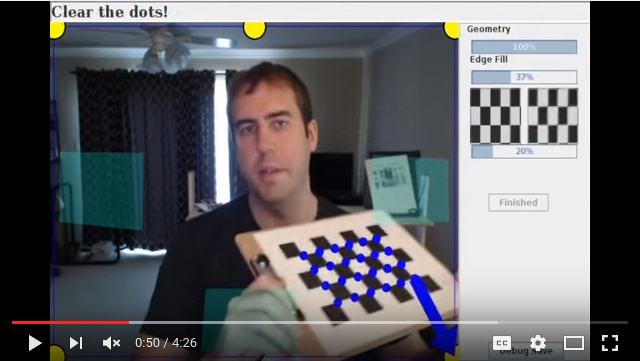

Webcam calibration allows you to capture images live and provides assistance to ensure that you capture good, high-quality images. It guides you to take images near the image border with sufficient slant for the linear solver to work, encourages you to hold still to reduce blur, and automatically selects the sharpest images. Once you are done capturing images, you are presented with another view where you can inspect the results for individual images and across the whole dataset.
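
To illustrate what selecting the sharpest images can mean, here is a minimal sketch that scores each grayscale frame by its mean squared Laplacian response and picks the highest score. This is a common focus measure used here as a stand-in; the metric the application actually uses is not described in this tutorial, and the class and method names are hypothetical.

```java
import java.util.List;

/** Illustrative focus measure; not necessarily what the calibration application uses. */
final class SharpnessSketch {

    /** Mean squared 4-neighbor Laplacian over interior pixels. Larger means sharper. */
    static double sharpness(int[][] gray) {
        int height = gray.length;
        int width = height == 0 ? 0 : gray[0].length;
        double sum = 0;
        int count = 0;
        for (int y = 1; y < height - 1; y++) {
            for (int x = 1; x < width - 1; x++) {
                double laplacian = gray[y - 1][x] + gray[y + 1][x]
                        + gray[y][x - 1] + gray[y][x + 1] - 4.0 * gray[y][x];
                sum += laplacian * laplacian;
                count++;
            }
        }
        return count == 0 ? 0.0 : sum / count;
    }

    /** Index of the sharpest frame, or -1 if the list is empty. */
    static int sharpestIndex(List<int[][]> frames) {
        int best = -1;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (int i = 0; i < frames.size(); i++) {
            double score = sharpness(frames.get(i));
            if (score > bestScore) {
                bestScore = score;
                best = i;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        int[][] step = new int[8][8];
        int[][] ramp = new int[8][8];
        for (int y = 0; y < 8; y++) {
            for (int x = 0; x < 8; x++) {
                step[y][x] = x < 4 ? 0 : 255;  // hard edge
                ramp[y][x] = x * 32;           // smooth gradient, like a blurred edge
            }
        }
        System.out.println("sharpest frame index: " + sharpestIndex(List.of(ramp, step)));
    }
}
```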

Calibration from a set of images:

java -jar applications.jar CameraCalibration --Directory=images/ CHESSBOARD --Grid=7:5

Calibration from a webcam:

java -jar applications.jar CameraCalibration --Camera=0 --Resolution=640:480 CHESSBOARD --Grid=7:5

Results will be stored in the "calibration_data" directory. This includes found calibration parameters along with the collected images.

Custom Video Sources

The assisted calibration uses a video feed from Webcam Capture by default. With a little bit of coding, it's easy to add a video source from just about anything, as long as you can get a BufferedImage. Take a look at CameraCalibration.
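
Getting a BufferedImage is usually the main piece of glue code. As a sketch, suppose a capture library hands you each frame as a row-major 8-bit grayscale byte array. That buffer format and the helper name below are assumptions for illustration, and how the resulting image is passed on to CameraCalibration is not shown here.

```java
import java.awt.image.BufferedImage;

/** Illustrative adapter from a raw grayscale buffer to a BufferedImage. */
final class FrameAdapterSketch {

    /** Copies a row-major 8-bit grayscale buffer into a new BufferedImage. */
    static BufferedImage toBufferedImage(byte[] gray, int width, int height) {
        if (gray.length != width * height) {
            throw new IllegalArgumentException("buffer size does not match width * height");
        }
        BufferedImage image = new BufferedImage(width, height, BufferedImage.TYPE_BYTE_GRAY);
        // TYPE_BYTE_GRAY stores one byte per pixel, so the buffer can be copied directly
        image.getRaster().setDataElements(0, 0, width, height, gray);
        return image;
    }

    public static void main(String[] args) {
        byte[] buffer = {0, (byte) 200, 50, 100, (byte) 255, 7};
        BufferedImage image = toBufferedImage(buffer, 3, 2);
        System.out.println(image.getWidth() + "x" + image.getHeight());
    }
}
```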

Coding Up Your Own Application

Coding up your own software to load and process calibration images is easy, but more tedious. It is also the only option for stereo cameras, since the calibration application doesn't support them yet.

Example Code: see Calibrate Monocular Camera and Calibrate Stereo Camera under Quick Links.

Removing Lens Distortion from Images

Most computer vision algorithms assume a pinhole camera model. Undistorting an image allows you to treat it as if it came from a pinhole camera and can make it more visually appealing, since the borders are no longer heavily distorted. This operation can be relatively expensive. Internally, most algorithms in BoofCV detect features in the distorted image and then undistort the individual features.
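
Undistorting an individual feature is cheap compared to warping a whole image. The sketch below assumes a two-term radial distortion model in normalized image coordinates and inverts it with a simple fixed-point iteration, which converges when distortion is mild. The class and method names are illustrative, and this is not BoofCV's implementation.

```java
/** Illustrative removal of radial distortion from a single point in normalized coordinates. */
final class PointUndistortSketch {

    /** Applies the distortion model: p_d = p_u * (1 + k1 r^2 + k2 r^4). */
    static double[] distort(double x, double y, double k1, double k2) {
        double r2 = x * x + y * y;
        double scale = 1.0 + k1 * r2 + k2 * r2 * r2;
        return new double[]{x * scale, y * scale};
    }

    /** Inverts the model by repeatedly dividing by the scale at the current estimate. */
    static double[] undistort(double xd, double yd, double k1, double k2, int iterations) {
        double x = xd, y = yd;  // start from the distorted location
        for (int i = 0; i < iterations; i++) {
            double r2 = x * x + y * y;
            double scale = 1.0 + k1 * r2 + k2 * r2 * r2;
            x = xd / scale;
            y = yd / scale;
        }
        return new double[]{x, y};
    }

    public static void main(String[] args) {
        double[] distorted = distort(0.3, -0.2, -0.2, 0.05);
        double[] recovered = undistort(distorted[0], distorted[1], -0.2, 0.05, 20);
        System.out.printf("recovered = (%.6f, %.6f)%n", recovered[0], recovered[1]);
    }
}
```

To use this with pixel coordinates, first convert the pixel to normalized coordinates with the inverse of the intrinsic matrix, then convert the result back if pixels are needed.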

Example Code: see Remove Lens Distortion under Quick Links.

Stereo Rectification

Stereo rectification is the process of warping two images so that both of their epipoles are at infinity, typically along the x-axis. When this happens, the epipolar lines are all parallel to each other, which reduces the problem of finding feature correspondences to a search along one image axis. Many stereo algorithms require images to be rectified first.
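
The payoff of rectification is that matching becomes a one-dimensional search. As a minimal sketch, assume the same row has been extracted from the rectified left and right images, and that a feature at column x in the left image appears at column x - d in the right image for some disparity d >= 0. The code below finds d by minimizing the sum of absolute differences over a small window. The names and the cost function are illustrative choices, not BoofCV's stereo algorithms.

```java
/** Illustrative 1D correspondence search along one row of a rectified stereo pair. */
final class RowSearchSketch {

    /** Returns the disparity with the lowest window cost, or -1 if no window fits. */
    static int bestDisparity(int[] leftRow, int[] rightRow, int x, int radius, int maxDisparity) {
        int best = -1;
        long bestCost = Long.MAX_VALUE;
        for (int d = 0; d <= maxDisparity; d++) {
            int center = x - d;  // candidate column in the right image
            // Skip candidates whose window would leave either row
            if (x - radius < 0 || center - radius < 0
                    || x + radius >= leftRow.length || center + radius >= rightRow.length) {
                continue;
            }
            long cost = 0;
            for (int k = -radius; k <= radius; k++) {
                cost += Math.abs(leftRow[x + k] - rightRow[center + k]);
            }
            if (cost < bestCost) {
                bestCost = cost;
                best = d;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        int[] left = new int[40];
        int[] right = new int[40];
        for (int i = 0; i < 40; i++) left[i] = (i * 37) % 101;
        // The right row is the left row shifted by three pixels
        for (int i = 0; i + 3 < 40; i++) right[i] = left[i + 3];
        System.out.println("disparity: " + bestDisparity(left, right, 20, 2, 8));
    }
}
```

Without rectification, the same search would have to follow a differently sloped epipolar line for every feature.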

Rectification can be done on calibrated or uncalibrated images. Here, calibrated means that the stereo baseline (the extrinsic parameters between the two cameras) is known. In practice, however, lens distortion often needs to be removed from the images even in the "uncalibrated" case.

The uncalibrated case can be done using automatically detected and associated features; however, it is much trickier to get right than the calibrated case. Any small association error will cause a large error in rectification. Even if a robust, state-of-the-art feature is used (e.g. SURF) and matches are pruned using the epipolar constraint, this alone will not be enough. Additional knowledge of the scene needs to be taken into account.
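
For reference, pruning with the epipolar constraint can be sketched as follows. Given a fundamental matrix F with the convention p2ᵀ F p1 = 0, a match is kept only if the point in the second image lies within a few pixels of the epipolar line F p1. The class name, the match representation, and the threshold are assumptions for illustration. As noted above, this step only removes matches that are inconsistent with F and does not by itself guarantee a good rectification.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative pruning of feature matches using distance to the epipolar line. */
final class EpipolarPruneSketch {

    /** Distance in pixels from p2 to the epipolar line F * p1 in the second image. */
    static double epipolarDistance(double[][] F, double[] p1, double[] p2) {
        // Epipolar line l = F * (x1, y1, 1)
        double l0 = F[0][0] * p1[0] + F[0][1] * p1[1] + F[0][2];
        double l1 = F[1][0] * p1[0] + F[1][1] * p1[1] + F[1][2];
        double l2 = F[2][0] * p1[0] + F[2][1] * p1[1] + F[2][2];
        double norm = Math.hypot(l0, l1);
        if (norm == 0) return Double.POSITIVE_INFINITY;  // degenerate line, treat as a bad match
        return Math.abs(p2[0] * l0 + p2[1] * l1 + l2) / norm;
    }

    /** Keeps matches, given as {p1, p2} pairs, that lie within maxPixels of their epipolar line. */
    static List<double[][]> prune(double[][] F, List<double[][]> matches, double maxPixels) {
        List<double[][]> kept = new ArrayList<>();
        for (double[][] match : matches) {
            if (epipolarDistance(F, match[0], match[1]) <= maxPixels) kept.add(match);
        }
        return kept;
    }

    public static void main(String[] args) {
        // Fundamental matrix of an ideal rectified pair, where the constraint reduces to y1 = y2
        double[][] F = {{0, 0, 0}, {0, 0, -1}, {0, 1, 0}};
        List<double[][]> matches = new ArrayList<>();
        matches.add(new double[][]{{10, 20}, {5, 20}});
        matches.add(new double[][]{{10, 20}, {5, 23}});
        System.out.println("matches kept: " + prune(F, matches, 1.0).size());
    }
}
```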